AI Attribution Fails in 90 Days. This 4-Step Rollout Works.

A practitioner's guide to implementing AI-powered attribution that actually survives contact with reality

Let me tell you what usually happens.

A marketing team gets excited about AI-powered attribution. They've read the case studies. They've seen the vendor demos. They sign the contract, flip the switch, and wait for the insights to roll in.

Ninety days later, nobody trusts the numbers. The model says paid social deserves 40% of conversion credit, but that doesn't match what the sales team sees on the ground. Leadership asks why they spent six figures on a system that contradicts their intuition. The attribution platform becomes another expensive dashboard nobody opens.

I've watched this pattern unfold at pharmaceutical companies, financial services firms, and e-commerce businesses. The technology works. The implementation doesn't.

The gap isn't capability. It's rollout methodology.

AI attribution requires a phased approach that builds organizational trust incrementally. You can't replace your entire measurement framework overnight and expect anyone to believe the output. You need a systematic process that validates results at each stage before expanding scope.

Here's the four-step framework that actually works.

Step 1: Establish Your Data Foundation (Weeks 1-4)

Every AI attribution failure I've investigated traces back to the same root cause: garbage data producing garbage insights.

Attribution models ingest touchpoint data from your CRM, marketing automation platform, ad networks, website analytics, and potentially dozens of other sources. If those sources contain duplicates, inconsistent formatting, or fragmented customer records, your attribution model will confidently assign credit to the wrong channels.

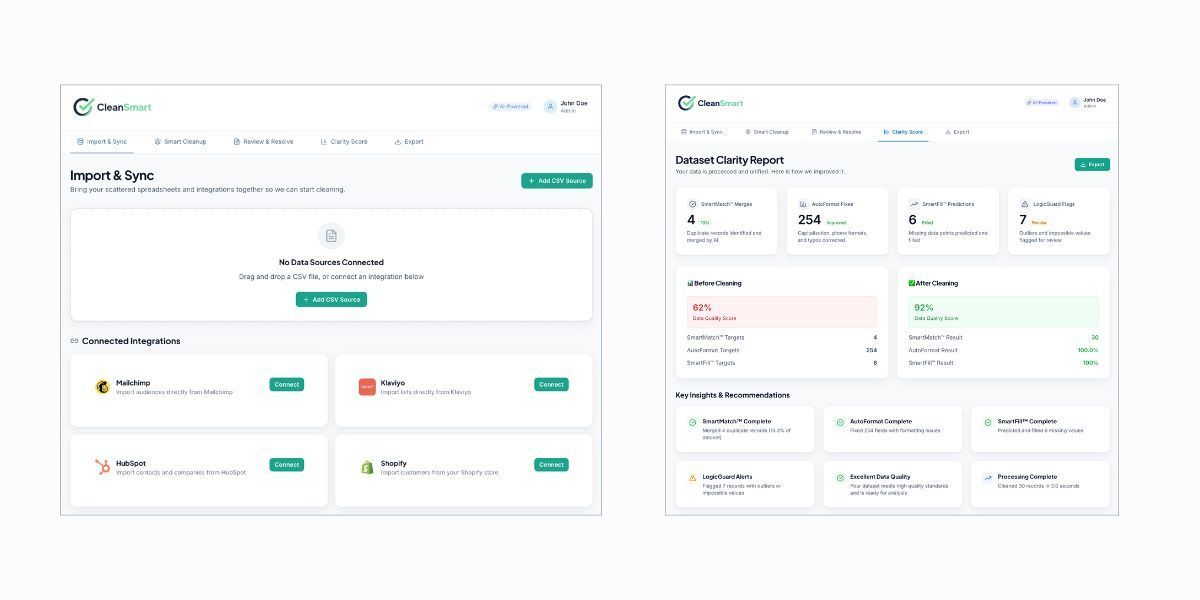

Consider what happens when a single customer exists as three separate records in your CRM. They clicked a Facebook ad as "Jon Smith," attended a webinar as "John Smith," and converted through email as "J. Smith at Acme Corp." A traditional attribution model sees three different people. Your AI model might be smart enough to connect them, or it might not. Either way, you're starting with compromised data.

Before implementing any attribution technology, audit your data quality across four dimensions.

- Completeness: What percentage of records have populated values for the fields your attribution model needs? Missing UTM parameters, blank campaign fields, and incomplete contact records all create blind spots.

- Consistency: Are the same values formatted the same way across systems? "United States," "US," "USA," and "U.S.A." might mean the same thing to a human, but they fragment your data for analysis.

- Accuracy: Do the values reflect reality? Outdated job titles, wrong company associations, and stale email addresses introduce noise that corrupts model training.

- Uniqueness: How many duplicate records exist? Duplicates don't just inflate your database costs. They fragment customer journeys and make accurate attribution mathematically impossible.
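
If you want to put rough numbers on three of these dimensions, a short script over an exported sample goes a long way. The sketch below is one way to do it. It assumes your records are dictionaries, and the field names (`email`, `country`, `utm_source`) and the country mapping are hypothetical placeholders. Accuracy is left out because it needs an external source of truth to check against, which a script can't supply on its own.

```python
from collections import Counter

# Hypothetical field list; substitute the fields your attribution model actually needs.
REQUIRED_FIELDS = ("email", "country", "utm_source")

# Hypothetical canonical map for one field, used to measure consistency.
COUNTRY_CANONICAL = {"united states": "US", "us": "US", "usa": "US", "u.s.a.": "US"}


def audit_sample(records, required=REQUIRED_FIELDS):
    """Score a sample of contact records on completeness, consistency and uniqueness."""
    if not records:
        raise ValueError("need at least one record")
    n = len(records)

    # Completeness: share of records with a non-blank value for each required field.
    completeness = {
        field: sum(1 for r in records if str(r.get(field) or "").strip()) / n
        for field in required
    }

    # Consistency: canonical values that appear under more than one raw spelling.
    spellings = {}
    for r in records:
        raw = str(r.get("country") or "").strip()
        if raw:
            canon = COUNTRY_CANONICAL.get(raw.lower(), raw)
            spellings.setdefault(canon, set()).add(raw)
    inconsistent = {c: sorted(v) for c, v in spellings.items() if len(v) > 1}

    # Uniqueness: share of records whose normalized email is shared with another record.
    emails = [str(r.get("email") or "").strip().lower() for r in records]
    counts = Counter(e for e in emails if e)
    duplicate_share = sum(1 for e in emails if e and counts[e] > 1) / n

    return {
        "completeness": completeness,
        "inconsistent_country": inconsistent,
        "duplicate_share": duplicate_share,
    }
```

Treat the output as a starting point for the conversation about what to fix, not as a formal quality score.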

If your data quality scores poorly on any of these dimensions, fix that first. Implementing AI attribution on a broken data foundation is like installing a GPS system in a car with no wheels. The technology is sophisticated. It's just not going anywhere.

For organizations struggling with data quality at scale, this is where automated data cleaning tools become essential. Manual cleanup doesn't scale, and your attribution model will only be as accurate as the data feeding it. I built CleanSmart specifically because I kept seeing attribution projects fail at this prerequisite step. Semantic duplicate detection finds the "Jon Smith / John Smith" matches that exact string matching misses. But whether you use CleanSmart or another solution, don't skip the data foundation work.
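
To make the difference from exact matching concrete, here is a minimal sketch of plain fuzzy matching using Python's standard `difflib`. This is character-level similarity, not semantic detection, and it is not how CleanSmart works. It assumes each record has hypothetical `id`, `name`, and `company` fields, and it only compares records that share a company (a technique called blocking) so the number of comparisons stays manageable.

```python
from collections import defaultdict
from difflib import SequenceMatcher
from itertools import combinations


def normalize_name(name):
    # Lowercase, treat periods as spaces, and collapse whitespace: "J. Smith" -> "j smith".
    return " ".join(name.lower().replace(".", " ").split())


def candidate_duplicates(records, threshold=0.8):
    """Return (id_a, id_b, score) for same-company records with similar names."""
    # Blocking: only compare records that share a normalized company name.
    by_company = defaultdict(list)
    for r in records:
        by_company[r["company"].strip().lower()].append(r)

    pairs = []
    for group in by_company.values():
        for a, b in combinations(group, 2):
            score = SequenceMatcher(
                None, normalize_name(a["name"]), normalize_name(b["name"])
            ).ratio()
            if score >= threshold:
                pairs.append((a["id"], b["id"], round(score, 2)))
    return pairs
```

With the three Smith records from the example above, all three pairs clear the (arbitrary) 0.8 threshold. The limits are just as instructive: nicknames like "Bob" and "Robert", or the same person listed under two company names, slip straight past this kind of matching.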

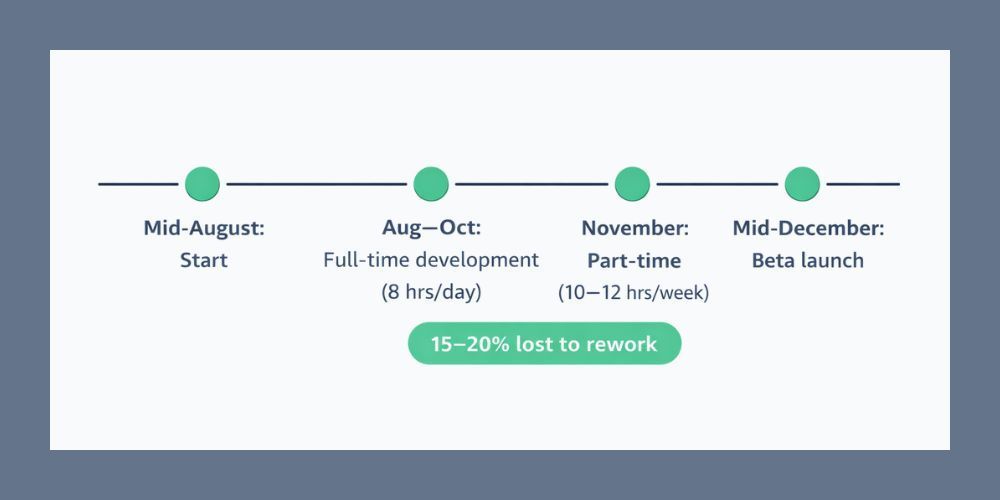

This phase typically requires three to four weeks for mid-sized organizations. Larger enterprises with complex data ecosystems may need longer. Resist the pressure to rush. Every week invested here saves months of troubleshooting later.

Step 2: Run a Controlled Pilot (Weeks 5-10)

Once your data foundation is solid, resist the temptation to deploy AI attribution across your entire marketing operation. Start with a controlled pilot that limits scope while maximizing learning.

Select a single channel or campaign type for your initial implementation. Ideal pilot candidates share three characteristics.

- High volume: You need enough conversions to generate statistically meaningful insights. A campaign producing five conversions per month won't give your AI model enough signal to learn from.

- Clear measurement: Choose something with well-defined success metrics. Lead generation campaigns with form submissions work better for pilots than brand awareness campaigns with fuzzy engagement metrics.

- Contained complexity: Avoid campaigns that span dozens of touchpoints across multiple business units. Start simple. Complexity comes later.
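
If you have more than a handful of options, writing the three criteria down as explicit filters keeps the selection honest. The sketch below is one way to do that. The fields and default thresholds are hypothetical placeholders, so set them from your own conversion volumes and journey data.

```python
from dataclasses import dataclass


@dataclass
class PilotCandidate:
    name: str
    monthly_conversions: int       # high volume
    has_defined_conversion: bool   # clear measurement, e.g. a form submission
    median_touchpoints: float      # contained complexity
    business_units: int            # contained complexity


def shortlist(candidates, min_conversions=200, max_touchpoints=6, max_units=1):
    """Keep candidates that meet all three criteria, highest volume first.
    The default thresholds are placeholders, not recommendations."""
    passing = [
        c for c in candidates
        if c.monthly_conversions >= min_conversions
        and c.has_defined_conversion
        and c.median_touchpoints <= max_touchpoints
        and c.business_units <= max_units
    ]
    return sorted(passing, key=lambda c: c.monthly_conversions, reverse=True)
```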

During the pilot phase, run your AI attribution model in parallel with your existing measurement approach. Don't replace your current reporting. Augment it. This parallel operation serves two purposes.

First, it builds organizational trust. When your AI model and your existing reports agree, stakeholders gain confidence in the new system. When they disagree, you have an opportunity to investigate why and determine which measurement approach better reflects reality.

Second, it reveals calibration needs. AI attribution models require tuning. Your pilot phase is where you discover that the model over-weights certain touchpoints, under-counts specific channels, or produces anomalies that require investigation. Better to find these issues in a controlled pilot than after full deployment.
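
A simple way to run the side-by-side comparison is to convert both systems' channel credit into shares and flag the channels where they diverge. The sketch below assumes you can export conversion credit per channel from both the AI model and your existing reports. The 5-point tolerance is an arbitrary placeholder.

```python
def credit_shares(credit):
    """Convert raw conversion credit per channel into shares that sum to 1."""
    total = sum(credit.values())
    return {channel: value / total for channel, value in credit.items()}


def flag_discrepancies(ai_credit, baseline_credit, tolerance=0.05):
    """Return channels whose AI share differs from the baseline share by more than
    `tolerance` (absolute share points). Positive = AI gives more credit."""
    ai, base = credit_shares(ai_credit), credit_shares(baseline_credit)
    flagged = {}
    for channel in sorted(set(ai) | set(base)):
        gap = ai.get(channel, 0.0) - base.get(channel, 0.0)
        if abs(gap) > tolerance:
            flagged[channel] = round(gap, 3)
    return flagged


# Example: the AI model credits paid social far more than last-click reporting does.
flags = flag_discrepancies(
    {"paid_search": 400, "paid_social": 400, "email": 200},
    {"paid_search": 500, "paid_social": 280, "email": 220},
)
```

The flagged channels are an investigation list, not a verdict. A gap tells you where to look, not which system is right.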

Document everything during this phase. What surprised you? Where did the AI insights contradict conventional wisdom? What validation did you perform to determine which perspective was correct? This documentation becomes invaluable when expanding beyond the pilot.

A well-run pilot typically requires six weeks. Two weeks for initial deployment and configuration. Two weeks of parallel measurement. Two weeks for analysis, calibration, and stakeholder review.

Step 3: Expand Incrementally (Weeks 11-20)

With a successful pilot complete, you can begin expanding AI attribution to additional channels and campaign types. The key word is "incrementally."

I've seen organizations attempt to flip the switch from pilot to full deployment overnight. It fails almost every time. Stakeholders who weren't involved in the pilot don't trust the numbers. Edge cases that didn't appear in your controlled environment suddenly dominate the data. The model that worked beautifully for paid search produces nonsensical results for event marketing.

Instead, expand in deliberate phases. Add one channel or campaign type at a time. Allow two to three weeks for each expansion to stabilize before adding the next.

Prioritize expansion based on business impact and measurement complexity. High-spend channels deserve attention first because the ROI of accurate attribution is highest there. Simpler channels should precede complex ones because they're more likely to validate successfully.
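
One way to balance those two pulls is to rank channels by spend per unit of complexity. The sketch below assumes a hypothetical 1-to-5 complexity rating that you assign by judgment. The spend figures in the test are illustrative only. The ranking should start a discussion, not settle it.

```python
def expansion_order(channels):
    """channels maps name -> (monthly_spend, complexity), complexity 1 (simple) to 5 (complex).
    Rank by spend per unit of complexity: high spend and low complexity come first."""
    for name, (_, complexity) in channels.items():
        if not 1 <= complexity <= 5:
            raise ValueError(f"{name}: complexity must be between 1 and 5")
    return sorted(channels, key=lambda ch: channels[ch][0] / channels[ch][1], reverse=True)
```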

A typical expansion sequence might look like this:

- Phase 1 (pilot): Paid search campaigns

- Phase 2: Paid social campaigns

- Phase 3: Email marketing programs

- Phase 4: Content marketing and organic search

- Phase 5: Event marketing and offline touchpoints

- Phase 6: Partner and affiliate channels

Each expansion phase follows the same parallel measurement protocol from your pilot. Run the AI model alongside existing measurement for two weeks. Investigate discrepancies. Calibrate as needed. Only after validation do you begin using the AI insights for actual decision-making.
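
To make "only after validation" an explicit rule rather than a feeling, you can encode a simple gate. The sketch below assumes you record, for each week of parallel running, the largest channel-share gap between the AI model and your existing measurement. The tolerance and the two-week window are placeholders. A gap you have investigated and traced to a known flaw in the old reporting can be a legitimate pass, so treat the gate as a prompt for review, not a substitute for it.

```python
def ready_for_decisions(weekly_max_gap, tolerance=0.05, required_weeks=2):
    """weekly_max_gap: for each week of parallel running (oldest first), the largest
    absolute share gap between the AI model and existing measurement across channels.
    The phase passes once the most recent `required_weeks` weeks all stay within tolerance."""
    if len(weekly_max_gap) < required_weeks:
        return False
    return all(gap <= tolerance for gap in weekly_max_gap[-required_weeks:])
```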

This incremental approach takes longer than a big-bang deployment. It also actually works. In the rollouts I've seen, organizations that follow this methodology achieve markedly higher stakeholder adoption and sustained usage of their attribution systems.

Step 4: Operationalize for Continuous Improvement (Ongoing)

Attribution isn't a project. It's a capability. The final step transforms your AI attribution system from a one-time implementation into an operational discipline.

Establish governance. Who owns the attribution model? Who has authority to modify configuration? Who resolves disputes when attribution insights conflict with stakeholder intuition? These questions seem administrative until they become urgent. Answer them before that happens.

Define intervention thresholds. AI attribution enables real-time optimization, but not every insight should trigger immediate action. Establish clear thresholds for when attribution data justifies budget reallocation. Minor fluctuations in channel performance shouldn't cause daily budget whiplash. Significant, sustained shifts should prompt review.
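
The difference between a minor fluctuation and a significant, sustained shift can be written down as a rule. The sketch below is one hedged version. It assumes you track a channel's attributed share per period against a baseline, and it only reports a shift when several consecutive periods move the same way by more than a threshold. Both the 5-point threshold and the three-period window are placeholders.

```python
def is_sustained_shift(shares, baseline, threshold=0.05, periods=3):
    """shares: a channel's attributed share per period, oldest first.
    True only when each of the last `periods` values sits more than `threshold`
    away from `baseline` in the same direction; one-off spikes return False."""
    recent = shares[-periods:]
    if len(recent) < periods:
        return False
    deltas = [s - baseline for s in recent]
    return all(d > threshold for d in deltas) or all(d < -threshold for d in deltas)
```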

One approach that works well: AI makes automated adjustments within defined parameters (say, plus or minus 10% budget shifts between channels). Larger reallocations require human review and approval. This "human-in-the-loop" protocol balances AI speed with organizational judgment.
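
Here is a minimal sketch of that routing rule. It interprets the 10% limit as the relative change to each channel's current budget, which is only one possible reading. Percentage points of total budget would be another. It also assumes that adding a brand-new channel always needs a person to sign off.

```python
def route_reallocation(current, proposed, auto_limit=0.10):
    """Return "auto" when every channel's budget moves by at most `auto_limit`
    (relative to its current budget) and no new channel is added; otherwise
    return "human_review"."""
    if set(proposed) - set(current):
        return "human_review"  # new channels always get a human decision
    for channel, now in current.items():
        new = proposed.get(channel, 0.0)  # a channel missing from the proposal is cut to zero
        if now == 0:
            if new != 0:
                return "human_review"
            continue
        if abs(new - now) / now > auto_limit:
            return "human_review"
    return "auto"
```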

Create feedback loops. Attribution insights should flow to the teams that can act on them. If your model reveals that webinar attendance strongly predicts conversion, your content team needs to know. If paid social performs better on weekends, your media team needs that insight operationalized into their scheduling.

Build recurring touchpoints where attribution insights get translated into tactical recommendations. Weekly is ideal for fast-moving channels. Monthly works for longer-cycle campaigns. The cadence matters less than the consistency.

Schedule regular model reviews. Customer behavior changes. Channel effectiveness shifts. Competitive dynamics evolve. An attribution model calibrated in January may produce misleading results by July. Quarterly reviews of model performance against business outcomes catch drift before it corrupts decision-making.
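
A quarterly review needs something independent to compare the model against. The sketch below assumes you have one: for example, conversion estimates per channel from a holdout or geo test run during the same period. It flags channels where the model's attributed conversions deviate from that independent measurement by more than a placeholder 20%.

```python
def drift_report(attributed, measured, max_error=0.20):
    """attributed: conversions the model credits to each channel for the review period.
    measured: an independent estimate for the same channels and period.
    Returns {channel: relative_error} for channels outside max_error."""
    drifted = {}
    for channel, actual in measured.items():
        if channel not in attributed or actual == 0:
            continue  # no basis for a relative error; review these by hand
        error = abs(attributed[channel] - actual) / actual
        if error > max_error:
            drifted[channel] = round(error, 2)
    return drifted
```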

Document your methodology. People leave. Priorities shift. The institutional knowledge embedded in your attribution system shouldn't walk out the door when a key team member departs. Maintain clear documentation of model configuration, calibration decisions, and operational protocols.

This operationalization phase never truly ends. It's the ongoing work that transforms AI attribution from an expensive experiment into a genuine competitive advantage.

Why Most Implementations Still Fail

Even with a solid framework, AI attribution projects fail for predictable reasons. Knowing these failure modes helps you avoid them.

Expecting perfection instead of improvement. AI attribution will never achieve 100% accuracy. Customer journeys are too complex, data is too incomplete, and human motivation is too opaque. The goal isn't perfect measurement. It's better measurement than you had before. Organizations that reject AI attribution because it isn't perfect often stick with last-click models that are dramatically worse.
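
To see what last-click throws away, compare it with the simplest multi-touch rule, linear attribution. Linear attribution is not an AI model and is not offered here as the alternative. It is just a clear contrast on a single hypothetical journey.

```python
def last_click(path):
    """All credit to the final touchpoint."""
    return {path[-1]: 1.0}


def linear(path):
    """Equal credit to every touchpoint; repeat visits to a channel accumulate."""
    credit = {}
    for channel in path:
        credit[channel] = credit.get(channel, 0.0) + 1 / len(path)
    return credit


journey = ["paid_social", "email", "webinar", "email"]
print(last_click(journey))  # {'email': 1.0}
print(linear(journey))      # {'paid_social': 0.25, 'email': 0.5, 'webinar': 0.25}
```

Under last-click, the paid social ad and the webinar that started and advanced this journey receive nothing.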

Underinvesting in change management. Attribution changes how credit gets assigned. That means some teams will look better under the new model, and some will look worse. The team whose channel suddenly receives less attribution credit will question the methodology. Prepare for these conversations. Bring stakeholders into the process early. Make the logic transparent. Resistance is normal. Unaddressed resistance kills adoption.

Treating attribution as a technology problem. The technology is the easy part. Vendor platforms are sophisticated, well-documented, and supported by implementation teams. The hard part is organizational: aligning stakeholders, establishing governance, building trust in new measurement approaches, and operationalizing insights into action. Teams that focus exclusively on technology selection and configuration often discover that their perfectly implemented system sits unused.

Ignoring the data foundation. I keep returning to this point because it matters that much.

Poor data quality corrupts attribution more than any other factor. The model is doing exactly what it was designed to do. It's just operating on inputs that don't reflect reality. Fix the inputs.

What Real-Time Actually Means

A note on "real-time" attribution, since the term gets thrown around loosely.

Real-time doesn't mean your attribution model updates every millisecond. It means your insights are current enough to inform decisions while those decisions still matter.

For some organizations, real-time means intraday optimization. They're adjusting ad spend multiple times daily based on performance patterns that emerge throughout the day. For others, real-time means next-day reporting instead of waiting for monthly summaries.

The appropriate latency depends on your decision cycles. Ask yourself: how quickly can we actually act on attribution insights? If budget reallocation requires a week of approvals, sub-hourly attribution updates don't help you. Match your attribution cadence to your operational reality.

Organizations with mature attribution practices often find that reducing insight-to-action latency produces more value than improving model accuracy. Getting a good-enough answer fast beats getting a perfect answer too late to matter.

Connecting Attribution to Your Broader Stack

AI attribution doesn't exist in isolation. It connects to your broader MarTech ecosystem and should inform strategy across multiple functions.

Campaign planning: Attribution insights should shape where you invest next quarter, not just explain what happened last quarter. If your model consistently shows that certain channel combinations outperform others, build that intelligence into your media planning process.

Content strategy: Which content types appear most frequently in high-value conversion paths? Attribution can reveal that case studies outperform blog posts for enterprise buyers, or that video content accelerates mid-funnel progression. Feed these insights to your content team.
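
A first pass at that question can be a simple frequency count. The sketch below assumes you can export converted journeys as a deal value plus the content types touched along the way. The value cutoff is whatever "high-value" means for your business. Each content type is counted at most once per journey, so one prospect reading five blog posts doesn't drown out everyone else.

```python
from collections import Counter


def content_in_high_value_paths(conversions, min_value):
    """conversions: list of (deal_value, [content types touched on the path]).
    Counts each content type at most once per qualifying path, most frequent first."""
    counts = Counter()
    for value, touches in conversions:
        if value >= min_value:
            counts.update(sorted(set(touches)))  # sorted for deterministic tie order
    return counts.most_common()
```

Frequency is not the same as influence, so treat the ranking as a hypothesis to test with the content team.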

Sales alignment: For B2B organizations, marketing and sales alignment depends on shared measurement. Attribution provides a common language for discussing which marketing activities generate pipeline versus which generate noise.

Executive reporting: Leadership wants to know whether marketing spend produces returns. Attribution, properly implemented, provides defensible answers. Build executive dashboards that translate attribution insights into business outcomes like customer acquisition cost, pipeline contribution, and revenue influence.
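
Customer acquisition cost per channel is a good example of that translation: spend divided by the new customers the attribution model credits to the channel. The sketch below assumes you have both figures per channel for the same period. Multi-touch models can credit fractional customers, which the arithmetic handles without special treatment.

```python
def cac_by_channel(spend, attributed_customers):
    """Customer acquisition cost per channel: spend divided by the new customers the
    attribution model credits to that channel. Credit may be fractional under
    multi-touch models. Channels with no spend data or no credited customers are skipped."""
    return {
        channel: round(spend[channel] / customers, 2)
        for channel, customers in attributed_customers.items()
        if customers > 0 and channel in spend
    }
```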

The organizations that extract the most value from AI attribution treat it as a connective layer across their marketing operation, not as a standalone measurement tool.

Getting Started This Week

If you're ready to move forward, here's where to begin.

This week: Audit your current data quality. Pull a sample of 1,000 contact records and evaluate completeness, consistency, accuracy, and uniqueness. Be honest about what you find.

This month: Select your pilot scope. Identify the campaign type or channel that meets the criteria outlined above: high volume, clear measurement, contained complexity.

This quarter: Run your pilot with parallel measurement. Document findings. Calibrate the model. Build stakeholder confidence through demonstrated accuracy.

Next quarter: Begin incremental expansion to additional channels. Establish governance and operational protocols. Transition from project mode to capability mode.

The framework isn't complicated. Execution requires discipline, patience, and organizational alignment. But the organizations that get this right gain a genuine advantage: the ability to understand what's working, reallocate resources accordingly, and optimize continuously while competitors are still waiting for last month's reports.

How long does AI attribution implementation typically take?

A complete implementation from data foundation through operationalization typically requires 20-24 weeks for mid-sized organizations. Rushing this timeline usually produces implementations that fail to achieve stakeholder adoption. The phased approach described here takes longer upfront but produces systems that actually get used.

What's the minimum data volume needed for AI attribution?

Requirements vary by model and vendor, but as a rule of thumb, many AI attribution models need at least 1,000 monthly conversions to generate statistically reliable insights. Organizations with lower conversion volumes may need to aggregate data over longer time periods or focus on micro-conversions (like form submissions or content downloads) before attempting to model final purchase attribution.

How do I handle attribution for offline touchpoints like events or sales calls?

Offline touchpoint attribution requires deliberate data capture. Event attendance, sales call logs, and direct mail responses must be systematically recorded and connected to customer records. Many organizations implement this through CRM integration, where sales teams log activities that feed into the attribution model. The data foundation work in Step 1 should include protocols for capturing offline interactions.
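
The linking step can be as simple as matching each offline record to a CRM contact on a normalized email address and setting aside anything that doesn't match. The sketch below assumes hypothetical `email`, `type`, and `date` fields on offline records and a lookup from normalized email to contact ID. Unmatched records should go back for review rather than being silently dropped, because they are exactly the gaps that skew offline attribution.

```python
def link_offline(events, crm_contacts):
    """events: list of dicts with 'email', 'type' and 'date'.
    crm_contacts: dict mapping a normalized (trimmed, lowercased) email to a contact ID.
    Returns (touchpoints linked to a contact, events that could not be matched)."""
    touchpoints, unmatched = [], []
    for event in events:
        key = str(event.get("email") or "").strip().lower()
        contact_id = crm_contacts.get(key)
        if contact_id is None:
            unmatched.append(event)
        else:
            touchpoints.append(
                {"contact_id": contact_id, "channel": event["type"], "date": event["date"]}
            )
    return touchpoints, unmatched
```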

Author: William Flaiz