How E-commerce Companies Lose 23% of Revenue to Bad Product Data

The hidden taxonomy crisis costing mid-market retailers millions in lost conversions, ghost inventory, and customer abandonment

You're three weeks into Q4. Traffic's up 40%, but conversion rate dropped 2.3%. The merchandising team swears the products are there. Your site search returns 47 results for "men's blue shirt" but customers are bouncing at 68%.

Then you open the product database.

There are 11 different entries for the same shirt. Four have images. Two are marked "out of stock" even though you've got 200 units in the warehouse. Three have SKUs that don't match inventory management. One's in the wrong category entirely—it's showing up under "Women's Accessories."

Welcome to the silent revenue killer nobody wants to talk about: product data rot.

The 23% That Disappears Into Data Chaos

Here's the number most e-commerce operators don't want to admit: mid-market companies with 10,000-100,000 SKUs lose an average of 23% of potential revenue to bad product data. Not to competition. Not to pricing. To their own messy databases.

I've seen this firsthand across a dozen retailer transformations. The pattern's consistent. A company hits around 15,000 SKUs and suddenly their growth curve flattens. Not because demand dropped. Because their product architecture collapsed under its own weight.

The breakdown looks like this:

- 8-12% lost to poor search performance (customers can't find products that exist)

- 5-7% from broken recommendations (wrong products suggested, or none at all)

- 6-9% from inventory inaccuracy (showing out-of-stock when items are available, or vice versa)

- 2-4% from cart abandonment (variant selection errors, missing product details)

Add it up, and you're hemorrhaging nearly a quarter of your revenue. On a $50M business? That's $11.5M evaporating because your product data's a disaster.
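If you want to sanity-check that arithmetic against your own revenue number, here's a minimal Python sketch. The ranges come straight from the list above; the function names and the $50M input are just illustrative.

```python
# Loss ranges from the breakdown above, as (low, high) percent of potential revenue.
LOSS_RANGES = {
    "search": (8, 12),
    "recommendations": (5, 7),
    "inventory": (6, 9),
    "cart_abandonment": (2, 4),
}


def total_loss_range(ranges):
    """Sum the low ends and the high ends of every loss category."""
    low = sum(lo for lo, _ in ranges.values())
    high = sum(hi for _, hi in ranges.values())
    return low, high


def dollars_lost(annual_revenue, loss_pct):
    """Convert a loss percentage into dollars for a given revenue figure."""
    return annual_revenue * loss_pct / 100


print(total_loss_range(LOSS_RANGES))   # (21, 32)
print(dollars_lost(50_000_000, 23))    # 11500000.0
```

The four ranges sum to 21-32%, so the 23% average sits toward the low end of what the breakdown allows.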

Most operators know something's wrong. They see the symptoms—customer complaints, returns, support tickets about "can't find the product." But they don't connect the dots back to the root cause sitting in their PIM or e-commerce database.

The Four Horsemen of Product Data Hell

1. SKU Proliferation (Or: How You Get 47 Versions of One Shirt)

This one sneaks up on everyone. It starts innocently.

Marketing wants to track a holiday promotion, so they create new SKUs with "-HOLIDAY24" appended. Then the buying team adds spring inventory but uses a slightly different naming convention. Meanwhile, someone in ops is manually uploading a vendor file that has yet another SKU structure.

Six months later, you've got:

- SHIRT-BLU-001

- SHIRT-BLUE-001

- SHIRT-BL-001

- SHT-BLUE-001-S

- BLUE-SHIRT-001

- 00001-SHIRT-BLU

All for the same product.

Why does this murder your revenue? Two reasons:

First, your site search algorithm doesn't know these are the same item. A customer searches "blue shirt," gets 47 results (most of which are duplicates), gives up, and bounces. Internal data from one retailer I worked with showed that search result pages with more than 24 items had a 34% higher bounce rate than those with 12-18 items. Proliferation inflates those numbers artificially.

Second, inventory gets fragmented. You might show "out of stock" on one SKU while sitting on 200 units under a different SKU. That's not a forecasting problem—it's a data architecture problem.

The real cost: If 15% of your catalog suffers from SKU proliferation, and those products would normally convert at 3.2%, you're losing about 0.5% of total revenue. On $50M, that's $250K annually. Just from duplicate SKUs.
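To make the problem concrete, here's a rough sketch of how you might collapse those six SKUs into one canonical key. The alias table, the size-token list, and the function names are assumptions for illustration, not a standard; your own catalog will need its own mappings.

```python
import re
from collections import defaultdict

# Hypothetical lookup tables: extend these for your own abbreviations.
ALIASES = {"BLU": "BLUE", "BL": "BLUE", "SHT": "SHIRT"}
SIZE_TOKENS = {"XS", "S", "M", "L", "XL"}


def canonical_key(sku):
    """Reduce a SKU to an order-independent tuple of normalized tokens."""
    tokens = []
    for tok in re.split(r"[-_\s]+", sku.upper().strip()):
        if not tok or tok in SIZE_TOKENS:
            continue  # size belongs on the variant, not the product key
        if tok.isdigit():
            tok = str(int(tok))  # "001" and "00001" both become "1"
        tokens.append(ALIASES.get(tok, tok))
    return tuple(sorted(tokens))


def group_skus(skus):
    """Group raw SKUs that share the same canonical key."""
    groups = defaultdict(list)
    for sku in skus:
        groups[canonical_key(sku)].append(sku)
    return dict(groups)


skus = [
    "SHIRT-BLU-001", "SHIRT-BLUE-001", "SHIRT-BL-001",
    "SHT-BLUE-001-S", "BLUE-SHIRT-001", "00001-SHIRT-BLU",
]
for key, members in group_skus(skus).items():
    print(key, len(members))
```

With these tables, all six SKUs land in one group. Treat the output as a review list for a human, not as an automatic merge: an alias table this aggressive will also produce false matches.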

2. Duplicate Products (The Sneakier Cousin)

Duplicates are different from proliferation. These are separate product entries—different IDs, different URLs, different everything—for identical products.

How does this happen? Usually during migrations, bulk imports, or when multiple teams manage product creation without central governance. I've seen companies where merchandising, buying, and marketplace teams all had permission to create products. Nobody checked if the product already existed first.

You end up with:

- /products/blue-dress-12345

- /products/womens-blue-dress-casual-45678

Both pointing to the same dress. Both with different inventory counts. Both splitting your SEO juice. Both confusing your recommendation engine.

This kills you in three ways:

- Search engines hate it. Google sees duplicate content, gets confused about which page to rank, and often ranks neither particularly well. Your organic traffic takes a hit because you're competing with yourself.

- Recommendations break. Your "customers also bought" algorithm treats these as separate products. So instead of showing "47 people bought this dress and also bought these shoes," you're showing "3 people bought this version" and "2 people bought that version." The social proof that drives conversions? Gone.

- Inventory accuracy becomes fiction. One version shows 5 in stock. The other shows 12. The warehouse has 17. When the first listing sells through its 5, it flips to "out of stock" while 12 units sit under the other entry. A customer lands on the out-of-stock listing and bounces. You just lost a sale you had inventory to fulfill.

3. Orphaned Variants (The Variants That Lost Their Parent)

Variants are supposed to work like this: One parent product (Men's T-Shirt) with child variants (Small/Blue, Medium/Blue, Large/Blue). Clean hierarchy. Simple.

In reality? Half your variants are orphans—no parent product, floating in the database with broken relationships.

This happens during:

- Platform migrations where relationships don't map correctly

- Bulk updates that accidentally delete parent records

- Manual product entry where someone skips the parent creation step

- Vendor file imports that only include variants without parent data

The damage is subtle but deadly. These orphans don't show up in category pages. They don't appear in filtered searches. They're technically "active" in your database, but practically invisible to customers.

I audited one retailer with 87,000 products. 19,000 were orphaned variants. That's 22% of their catalog completely unsellable through normal navigation. The only way customers found these products was through direct links from Google Shopping ads—and even then, the product pages looked broken because variant selectors didn't work.

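The math is brutal: 19,000 products averaging $45 per item, 2.8% historical conversion rate. At roughly 1,000 visits per product per year (the traffic level that figure implies, since 19,000 × 1,000 × 2.8% × $45 ≈ $23.9M), that's $23.9M in potential sales that generated almost zero revenue. Not because customers didn't want the products. Because the data structure made them unfindable.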
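Finding orphans is usually a simple set lookup. A minimal sketch, assuming each variant record carries a `parent_id` field and each parent an `id` (hypothetical field names; your platform's export will differ):

```python
def find_orphans(variants, parents):
    """Return variants whose parent_id is missing or points at no existing parent."""
    parent_ids = {p["id"] for p in parents}
    return [v for v in variants if v.get("parent_id") not in parent_ids]


def orphan_share(orphan_count, catalog_size):
    """Orphans as a percentage of the whole catalog."""
    return orphan_count * 100 / catalog_size


parents = [{"id": "P1", "title": "Men's T-Shirt"}]
variants = [
    {"sku": "TEE-BLUE-S", "parent_id": "P1"},
    {"sku": "TEE-BLUE-M", "parent_id": "P9"},   # parent was deleted
    {"sku": "TEE-BLUE-L", "parent_id": None},   # parent never assigned
]
print([v["sku"] for v in find_orphans(variants, parents)])
print(round(orphan_share(19_000, 87_000), 1))  # 21.8, the "22%" from the audit above
```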

4. Incorrect Categorization (Or: Why Your Winter Coat Is In The Swimwear Section)

This is the one that makes customers question whether you know what you're selling.

Product categorization breaks down when:

- You let vendors assign categories without review

- Bulk imports use algorithmic categorization that's 73% accurate (which means 27% wrong)

- Multiple people categorize products using different mental models

- You reorganize your category structure but don't remap existing products

- Someone fat-fingers an entry and nobody catches it

I've seen bike helmets in "Kitchen & Dining." Laptop bags in "Pet Supplies." Kids' shoes in "Automotive Parts."

The revenue hit comes from two angles:

- Browse abandonment. A customer clicks "Women's Jackets," sees a men's jacket on page 2, questions whether your site actually has what they want, and leaves. Category page conversion rates drop 15-20% when even 5% of products are miscategorized.

- Filter failure. Customer applies filters: "Women's," "Size Large," "Blue." Your system returns 3 results—should be 24, but 21 are categorized wrong so they don't match the filter criteria. Customer assumes you don't have what they want. You just lost 21 possible sales.

Here's the part that stings: miscategorization isn't usually random. It clusters in certain product lines—often new arrivals, seasonal items, or vendor-sourced inventory. So you're most likely to lose sales on your freshest, highest-margin products.

The Framework: Stop The Bleeding In 90 Days

Alright. You're staring at this mess. Where do you even start?

Most operations teams try to boil the ocean—launch a 12-month "product data excellence initiative" with steering committees and phased rollouts. Meanwhile, they bleed revenue for another year.

Here's what works. I've run this playbook at three mid-market retailers. It stops the worst bleeding in 90 days and sets you up for long-term health.

Phase 1: Triage (Days 1-30)

Identify your most expensive problems first.

Pull three reports:

- Products with highest traffic but lowest conversion (likely data quality issues)

- Top 500 products by revenue—audit data completeness

- Products marked "out of stock" that show inventory in your WMS

These three reports will reveal 80% of your revenue-killing issues. Focus there first.
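Two of those reports are easy to approximate from raw exports. The sketch below assumes you can get site status, WMS counts, and per-product traffic into plain dictionaries; the field names, status strings, and thresholds are placeholders, not anything your platform necessarily uses.

```python
def stock_mismatches(site_status, wms_units):
    """SKUs the site marks out of stock while the WMS shows units on hand."""
    return sorted(
        sku
        for sku, status in site_status.items()
        if status == "out_of_stock" and wms_units.get(sku, 0) > 0
    )


def high_traffic_low_conversion(stats, min_sessions, max_conv_rate):
    """Products with plenty of sessions but a conversion rate at or below the cutoff."""
    flagged = []
    for sku, s in stats.items():
        if s["sessions"] >= min_sessions and s["sessions"] > 0:
            rate = s["orders"] / s["sessions"]
            if rate <= max_conv_rate:
                flagged.append((sku, rate))
    return sorted(flagged, key=lambda item: item[1])  # worst converters first


site_status = {"SHIRT-BLUE-001": "out_of_stock", "SHIRT-RED-001": "in_stock"}
wms_units = {"SHIRT-BLUE-001": 200, "SHIRT-RED-001": 35}
print(stock_mismatches(site_status, wms_units))

stats = {
    "SHIRT-BLUE-001": {"sessions": 1000, "orders": 5},
    "SHIRT-RED-001": {"sessions": 1000, "orders": 40},
}
print(high_traffic_low_conversion(stats, min_sessions=500, max_conv_rate=0.01))
```

Whatever lands on both lists and also sits in your top 500 by revenue is a natural first entry for the critical fix queue.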

Create a "critical fix" queue. Anything in your top 500 revenue-drivers with data problems goes straight to the front of the line. You're not trying to fix everything—you're protecting your cash cows.

Assign one person—doesn't matter if it's an analyst, a data coordinator, whatever—to own this queue. Their only job for 30 days: fix top-revenue product data. Nothing else. You'll recover 3-5% of that lost 23% in the first month just by fixing your highest-value products.

Phase 2: Build The Rules Engine (Days 31-60)

This is where you stop playing whack-a-mole and build actual governance.

Document your standards:

- SKU naming convention (one convention, enforced everywhere)

- Required fields for product creation (title, description, category, at least 2 images, price, inventory)

- Category assignment rules (create a decision tree—if it has X attribute, it goes in Y category)

- Variant relationship requirements (no orphans allowed)

Then—and this is the part most teams skip—turn these standards into system validation rules. Your e-commerce platform can probably enforce this. If it can't, you need middleware that validates uploads before they touch the database.

No more "we'll clean it up later." The system should reject bad data at the point of entry.
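If you end up writing that middleware yourself, the core of it is a function that returns a list of reasons to reject a record. Here's a minimal sketch of the standards listed above. The SKU pattern, field names, and error messages are all assumptions; swap in whatever convention you actually document.

```python
import re

# Hypothetical convention: TYPE-COLOR-NNN with an optional size suffix.
SKU_PATTERN = re.compile(r"^[A-Z]+-[A-Z]+-\d{3}(-[A-Z]+)?$")
REQUIRED_TEXT_FIELDS = ("title", "description", "category")


def validate_product(product, known_parent_ids):
    """Return a list of validation errors; an empty list means the record passes."""
    errors = []
    for field in REQUIRED_TEXT_FIELDS:
        if not str(product.get(field) or "").strip():
            errors.append(f"missing {field}")
    if len(product.get("images") or []) < 2:
        errors.append("needs at least 2 images")
    price = product.get("price")
    if not isinstance(price, (int, float)) or price <= 0:
        errors.append("price must be a positive number")
    inventory = product.get("inventory")
    if not isinstance(inventory, int) or inventory < 0:
        errors.append("inventory must be a non-negative integer")
    if not SKU_PATTERN.match(str(product.get("sku") or "")):
        errors.append("sku does not follow naming convention")
    # No orphans allowed: a variant must point at a parent that exists.
    if product.get("is_variant") and product.get("parent_id") not in known_parent_ids:
        errors.append("variant has no valid parent")
    return errors


candidate = {
    "sku": "00001-SHIRT-BLU",
    "title": "Blue Shirt",
    "description": "",
    "category": "Mens/Shirts",
    "images": ["front.jpg"],
    "price": 29.99,
    "inventory": 200,
}
print(validate_product(candidate, known_parent_ids=set()))
```

Returning every error at once, instead of failing on the first, saves the person uploading a vendor file from fixing it one rejection at a time.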

During this phase, you're also running duplicate detection. There are tools for this (Informatica, Stibo, Akeneo for mid-market). But honestly? For 50K SKUs, you can brute-force it with Python and fuzzy matching. Pull your product list into a CSV, run it through a script that flags potential duplicates based on product name similarity and attribute matching, then have a human review the results.

Budget 80 hours of manual review time. It's worth it.
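Here's roughly what that script can look like using only Python's standard library (`difflib.SequenceMatcher`). It's a sketch under assumptions: the `brand` and `color` fields, the 0.85 threshold, and the function names are illustrative. One practical note: comparing every product against every other gets slow at 50K SKUs, so this version only compares products that already share some attributes.

```python
from collections import defaultdict
from difflib import SequenceMatcher
from itertools import combinations


def normalize(name):
    """Lowercase, drop apostrophes, and sort words so word order doesn't matter."""
    return " ".join(sorted(name.lower().replace("'", "").split()))


def similarity(a, b):
    """Similarity ratio between two product names, from 0.0 to 1.0."""
    return SequenceMatcher(None, normalize(a), normalize(b)).ratio()


def flag_duplicates(products, threshold=0.85, block_fields=("brand", "color")):
    """Flag likely duplicate pairs, comparing only products with matching attributes."""
    blocks = defaultdict(list)
    for p in products:
        blocks[tuple(p.get(f) for f in block_fields)].append(p)
    flagged = []
    for block in blocks.values():
        for a, b in combinations(block, 2):
            score = similarity(a["name"], b["name"])
            if score >= threshold:
                flagged.append((a["id"], b["id"], round(score, 2)))
    return sorted(flagged, key=lambda pair: pair[2], reverse=True)


products = [
    {"id": "12345", "name": "Blue Casual Dress", "brand": "Acme", "color": "blue"},
    {"id": "45678", "name": "Casual Dress Blue", "brand": "Acme", "color": "blue"},
    {"id": "99999", "name": "Leather Belt", "brand": "Acme", "color": "blue"},
]
for pair in flag_duplicates(products):
    print(pair)
```

The output is the queue for your human reviewers. Lower the threshold and you catch more duplicates but spend more of those review hours on false positives.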

Phase 3: Fix The Structure (Days 61-90)

Now you're ready to systematically repair your catalog.

- Week 9: Reparent orphaned variants. Write a script that identifies variants without parents, then either creates parent records or reassigns variants to existing parents. This is tedious but not complicated. Budget 40 hours of effort.

- Week 10: Merge duplicate products. Take your duplicate detection results from Phase 2, merge the duplicates, set up 301 redirects from old URLs to canonical URLs, and update your sitemap. Another 40 hours.

- Week 11: Recategorize misplaced products. Use your category decision tree from Phase 2. This is the most manual part—you might need to recategorize 5,000-10,000 products. But here's the trick: start with the top-traffic miscategorized products. You'll get 70% of the benefit from fixing 30% of the problems.

- Week 12: Deploy and measure. Push changes live, then obsessively watch your metrics for a week. Site search conversion, category page conversion, inventory accuracy, recommendation click-through rate. They should all improve 8-15%.

If they don't, something's broken in your implementation. Go find it.
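For the Week 10 merge step, the mechanics can be sketched in a few lines. Two assumptions are baked in, and both are mine, not a rule: the listing with the most traffic becomes the canonical URL, and the merged inventory is the sum of the duplicate listings. If your WMS disagrees with that sum, trust the WMS.

```python
def merge_group(listings):
    """Merge duplicate listings into one record and map old URLs to the canonical one."""
    # Assumption: keep the URL that already gets the most sessions.
    canonical = max(listings, key=lambda p: p["sessions"])
    merged = dict(canonical)
    # Assumption: the duplicates split one physical stock pool between them.
    merged["inventory"] = sum(p["inventory"] for p in listings)
    redirects = {
        p["url"]: canonical["url"]
        for p in listings
        if p["url"] != canonical["url"]
    }
    return merged, redirects


listings = [
    {"url": "/products/blue-dress-12345", "inventory": 5, "sessions": 900},
    {"url": "/products/womens-blue-dress-casual-45678", "inventory": 12, "sessions": 300},
]
merged, redirects = merge_group(listings)
print(merged["url"], merged["inventory"])
for old, new in redirects.items():
    print(f"301: {old} -> {new}")
```

The redirect map is what you hand to whoever manages your platform's URL redirects; how you load it depends entirely on the platform.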

The Tools You Need (And Don't Need)

Let's talk tech stack, because this is where operators usually overspend.

You don't need:

- A $300K PIM system (not yet, anyway)

- AI-powered auto-categorization (it's 73% accurate, which means 27% of your products end up in the wrong place)

- A dedicated data governance team of 5 people

You do need:

- A single source of truth for product data (could be your e-commerce platform, could be a spreadsheet—doesn't matter, just needs to be singular)

- Validation rules that prevent bad data from entering the system

- A weekly audit process (30 minutes, someone checks the top 50 new products for data quality)

- A prioritization framework (fix high-revenue products first, always)

If you're managing 10K-100K SKUs, the sweet spot is usually a mid-tier PIM (Akeneo, Salsify, or Plytix run $20K-60K annually) combined with solid process discipline. The PIM handles validation, versioning, and multi-channel distribution. Process discipline ensures humans don't bypass the system.

The mistake most mid-market operators make? They buy the expensive PIM, skip the process discipline, and end up with expensive bad data instead of cheap bad data.

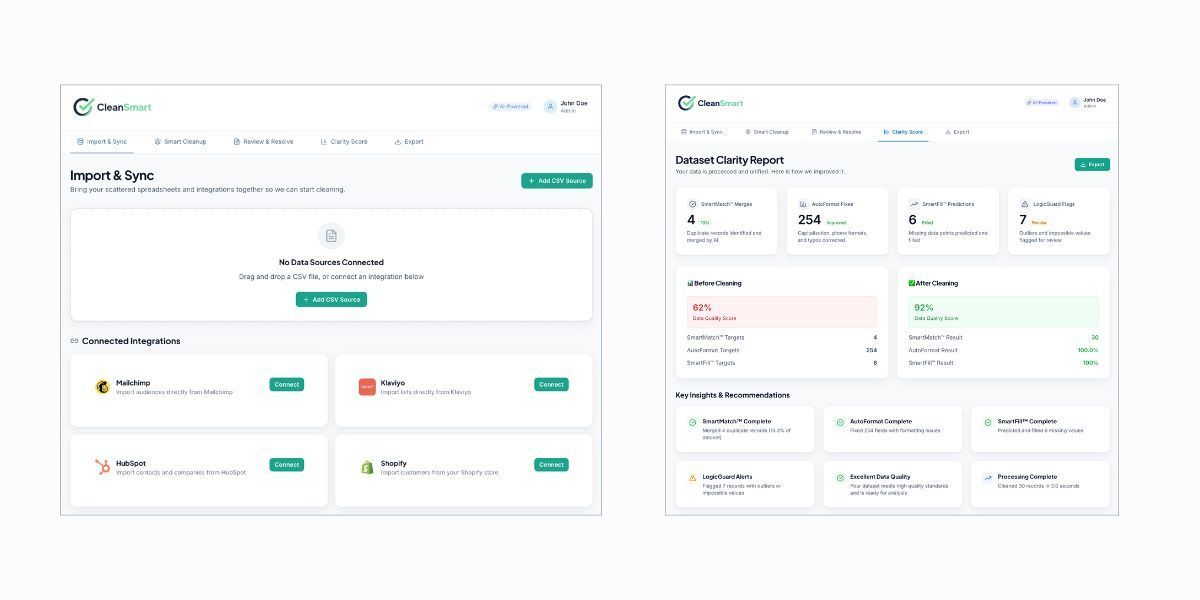

Stop losing revenue to bad data. CleanSmart helps e-commerce teams clean customer records. Direct Shopify, HubSpot, Klaviyo integrations.

The Metric That Matters

Here's how you know if this is working: inventory accuracy rate.

It's the single best proxy for overall product data health. If your inventory accuracy is 95%+, your product data is probably clean. If it's below 90%, you've got problems.

Calculate it weekly: (Number of products with accurate inventory / Total active products) × 100

Accurate inventory means: the quantity shown on your site matches what's actually in your warehouse within a tolerance of ±5 units.

Start measuring this now. You'll probably find you're at 78-85% accuracy. That's not unusual, but it's costing you 8-12% of revenue. Get that number above 95% and you'll claw back most of your losses.
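The calculation itself is a few lines. A minimal sketch, assuming you can export site quantities and warehouse quantities keyed by SKU (the dictionary shape and function name are illustrative); a SKU the warehouse has no record of is counted as inaccurate here, which is a choice you may want to change.

```python
def inventory_accuracy_rate(site_qty, warehouse_qty, tolerance=5):
    """Percent of active products whose site quantity is within tolerance of the warehouse."""
    if not site_qty:
        return 0.0
    accurate = sum(
        1
        for sku, shown in site_qty.items()
        if sku in warehouse_qty and abs(shown - warehouse_qty[sku]) <= tolerance
    )
    return accurate * 100 / len(site_qty)


site_qty = {"A": 10, "B": 0, "C": 50, "D": 7}
warehouse_qty = {"A": 12, "B": 200, "C": 45, "D": 7}
print(inventory_accuracy_rate(site_qty, warehouse_qty))  # 75.0
```

In the example, product B is the classic case: the site says zero, the warehouse says 200.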

The other metrics to watch:

- Search null result rate (searches that return zero results—should be <5%)

- Category page bounce rate (should drop as you fix categorization)

- Variant selection error rate (how often customers click a variant and get an error)

These are your early warning indicators. When they spike, it means product data is degrading again.
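The search null result rate is the easiest of these to compute yourself if your search tool doesn't report it. A small sketch, assuming a log of (query, result count) pairs, which is a hypothetical export format:

```python
def null_result_rate(search_log):
    """Percent of searches that returned zero results."""
    if not search_log:
        return 0.0
    nulls = sum(1 for _, result_count in search_log if result_count == 0)
    return nulls * 100 / len(search_log)


search_log = [("men's blue shirt", 47), ("blu shrt", 0), ("rain jacket", 12), ("tent", 8)]
print(null_result_rate(search_log))  # 25.0
```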

What Happens When You Fix This

Three months after implementing this framework at a $47M outdoor gear retailer, here's what changed:

- Site search conversion up 11.2%

- Category page conversion up 8.7%

- Inventory accuracy from 81% to 96%

- Customer service tickets about "can't find product" down 34%

- Revenue up $3.1M (attributed directly to data cleanup based on control group testing)

That's a 6.6% revenue increase. From fixing data.

The operations manager sent me an email six months in: "We're not chasing fires anymore. We're running the business." That's the shift. From reactive to proactive. From chaos to control.

Your product data might be a mess right now. But it's fixable. And the ROI is ridiculous—you're recovering revenue you already earned the right to capture, you just couldn't convert it because your data was broken.

Start with the triage phase. Pull those three reports tomorrow. Protect your top 500 revenue-driving products. Everything else can wait.

How do I convince leadership to invest in product data cleanup when it's not a "sexy" project?

Frame it as revenue recovery, not data hygiene. Show them the math: "We're losing $X in revenue to bad data. For $Y in effort, we recover 70% of that in 90 days." Use the 23% stat—on their revenue number. Make it real money, not percentages. And emphasize that this isn't a software purchase (leadership hates those), it's process + some manual labor.

We're on Shopify/BigCommerce/[platform]. Can we even enforce data validation rules?

Yes, but it depends on your platform tier. Most enterprise and Plus plans let you set required fields, create custom validation, and use webhooks to check data on entry. If your platform doesn't support this natively, you'll need middleware: something like Zapier for simple checks or a custom app for complex validation. The key is: don't let bad data into the system. Block it at entry instead of cleaning it up later. The MarTech Data Cleanliness & Reliability Checklist has a section on validation approaches by platform type.

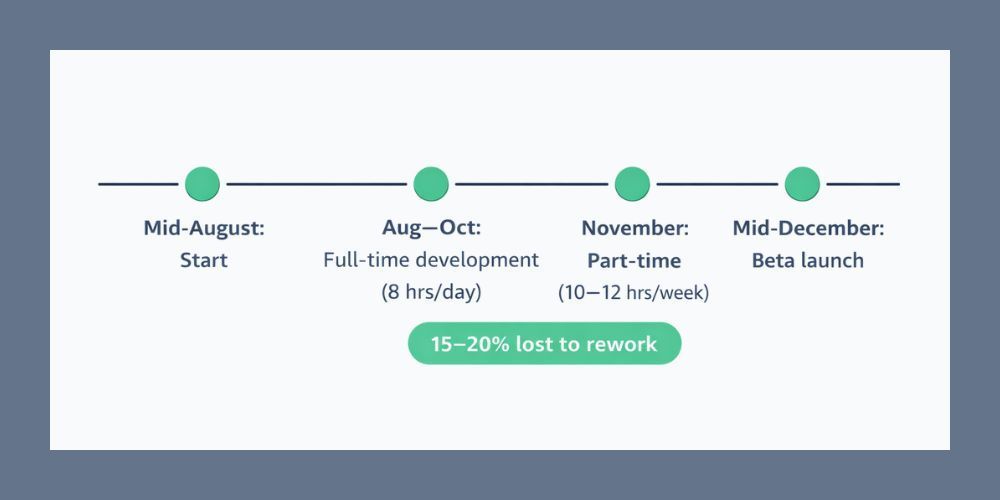

How much manual effort does this take? We're a lean team.

- Phase 1 (Triage): 40 hours total over 30 days—about 2 hours per day for one person.

- Phase 2 (Rules Engine): 60 hours—building standards, running duplicate detection

- Phase 3 (Structural Fixes): 120 hours—this is the heavy lift

So call it 220 hours total, or about 5-6 weeks of one FTE's time. Alternatively, you can sprint it with 2-3 people for a month. The ROI is 10x minimum if you're a $20M+ business, so even if you have to hire a contractor for 2 months, it pays for itself immediately.

Author: William Flaiz