The 4-Phase Data Cleanup Framework That Increased Deals by 7%

How a systematic approach to CRM data quality turned abandoned opportunities into closed revenue—without adding headcount or buying new tools.

Your sales team is working harder than ever. More calls. More emails. More pipeline activities logged into your CRM.

But deals keep slipping through the cracks.

A lead gets routed to the wrong rep because the territory field is blank. An automation workflow sends generic content to a high-value prospect because firmographic data is missing. A sales director pulls a forecast report that shows conflicting numbers because duplicate accounts are skewing the data.

Sound familiar?

At CT3, I watched this exact scenario play out. Despite having a sophisticated CRM and multiple marketing automation platforms, the sales organization was losing opportunities they didn't even know existed. The problem wasn't the technology—it was the data feeding that technology.

Six months after implementing a systematic data cleanup framework, monthly closed deals increased by 7%. Not through new lead sources. Not through additional sales headcount. Through better data quality that enabled better execution.

Here's the exact methodology we used.

The Hidden Cost of Dirty Data

Before we address the solution, let's quantify the problem.

When your CRM data is incomplete or inaccurate, three things happen:

First, automation fails. Marketing workflows designed to nurture leads can't execute when email addresses bounce, job titles are missing, or company size data is wrong. The automation runs, but it runs ineffectively—like a manufacturing line producing defective products.

Second, routing breaks. Lead assignment rules depend on accurate territory, industry, and account ownership data. When that data is missing or conflicting, leads sit in queues, get assigned to the wrong reps, or worse—get assigned to multiple reps simultaneously.

Third, reporting lies. When executives pull pipeline reports with duplicate accounts, inflated opportunity values, or missing stage data, they make strategic decisions based on fiction. The sales forecast becomes a creative writing exercise.

At CT3, we calculated the opportunity cost of these failures. Across a 12-month period, poor data quality had resulted in:

- 23% of marketing automation workflows failing to trigger properly

- 31% of inbound leads experiencing delayed or incorrect routing

- An average of 4.2 business days lost per misrouted lead

The solution wasn't more software. It was a systematic approach to data cleanup that could be maintained without constant manual intervention.

Phase 1: Audit (Manual Assessment)

The first phase sounds tedious because it is tedious. But you cannot fix what you haven't measured.

We exported core CRM data into spreadsheets and conducted manual reviews across five critical dimensions:

- Completeness: What percentage of records had all required fields populated? We defined "required" as any field that triggered automation, influenced routing, or appeared in executive reporting. At CT3, we discovered that 42% of lead records were missing at least one field that blocked automation execution.

- Accuracy: Were the values in populated fields correct? This required sampling—calling customers to verify phone numbers, checking company websites to validate job titles, cross-referencing LinkedIn to confirm employment status. Time-consuming, but revealing. We found a 19% error rate in fields we had assumed were accurate.

- Consistency: Did naming conventions match across records? Company names are notorious for inconsistency—"International Business Machines," "IBM," "IBM Corporation," and "I.B.M." all refer to the same company but create separate account records. We identified 347 duplicate accounts that had fragmented opportunity history and confused territory assignment.

- Timeliness: How old was the data? A job title from 2021 isn't reliable in 2024. Contact information degrades at roughly 30% annually in B2B databases. We flagged any record without activity in the past 18 months for verification or archiving.

- Relationships: Were parent-child account hierarchies correct? Enterprise sales depend on understanding corporate structure, but most CRMs struggle with complex organizational relationships. We found numerous subsidiary accounts incorrectly listed as independent companies, which broke territory rules and split opportunity tracking across multiple records.
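
At CT3 these checks were done by hand in spreadsheets. Accuracy and relationship checks genuinely need human verification, but completeness, consistency, and timeliness can be scripted over an export. What follows is a minimal Python sketch of those three, assuming records arrive as dictionaries. The field names, suffix list, alias table, and the 30-day-month approximation of the 18-month window are illustrative assumptions, not CT3's actual configuration.

```python
import re
from datetime import date, timedelta

# Hypothetical "required" fields: anything that triggers automation,
# drives routing, or appears in executive reporting.
REQUIRED_FIELDS = ["email", "company", "industry", "country", "territory"]

# Hypothetical alias table for names that punctuation/suffix cleanup cannot reconcile.
ALIASES = {"international business machines": "ibm"}
SUFFIXES = {"inc", "corp", "corporation", "co", "llc", "ltd"}


def completeness_rate(records, required=REQUIRED_FIELDS):
    """Share of records whose required fields are all non-blank."""
    if not records:
        return 0.0
    complete = sum(
        all(str(r.get(f) or "").strip() for f in required) for r in records
    )
    return complete / len(records)


def company_key(name):
    """Normalize a company name so spelling variants collapse to one key."""
    cleaned = re.sub(r"[^\w\s]", "", name.lower())  # "i.b.m." -> "ibm"
    words = [w for w in cleaned.split() if w not in SUFFIXES]
    key = " ".join(words)
    return ALIASES.get(key, key)


def duplicate_groups(names):
    """Group names by normalized key; keep only keys with more than one variant."""
    groups = {}
    for name in names:
        groups.setdefault(company_key(name), []).append(name)
    return {key: variants for key, variants in groups.items() if len(variants) > 1}


def is_stale(last_activity, today, months=18):
    """True if there has been no activity within roughly `months` months (30-day months)."""
    if last_activity is None:
        return True
    return today - last_activity > timedelta(days=30 * months)
```

Suffix stripping and punctuation removal catch "IBM Corporation" and "I.B.M.", but a full name like "International Business Machines" only collapses through the alias table, which someone has to maintain.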

The audit phase at CT3 took six weeks with two people working part-time. The output was a prioritized list of data quality issues ranked by business impact.

Phase 2: Prioritize (Focus on Automation Blockers)

With a comprehensive list of data problems, we resisted the urge to fix everything at once.

Instead, we applied a filtering methodology that focused resources on high-impact issues:

Impact Scoring: Each data quality problem received a score based on three factors:

- Revenue exposure (What's the pipeline value of affected opportunities?)

- Frequency (How many records have this problem?)

- Cascading effects (Does this issue cause other downstream problems?)

For example, missing territory data had high revenue exposure (affected $2.3M in pipeline), high frequency (31% of accounts), and cascading effects (broke routing AND reporting). It scored 27 out of 30 points.

Meanwhile, incomplete social media profile links had low revenue exposure (no direct pipeline impact), low frequency (only 8% of records), and no cascading effects. It scored 4 out of 30.
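
The article reports only the totals (27 and 4 out of 30). One simple rubric that yields a 30-point scale is to score each factor from 0 to 10 and add them. The Python sketch that follows does that; the 0-10 range per factor and the individual factor values are assumptions chosen to reproduce the published totals, since CT3's exact rubric is not described.

```python
from dataclasses import dataclass


@dataclass
class DataIssue:
    name: str
    revenue_exposure: int  # 0-10: pipeline value of affected opportunities
    frequency: int         # 0-10: share of records with the problem
    cascading: int         # 0-10: downstream problems it causes

    def impact_score(self) -> int:
        """Sum the three factors into a score out of 30."""
        factors = (self.revenue_exposure, self.frequency, self.cascading)
        if any(not 0 <= f <= 10 for f in factors):
            raise ValueError(f"{self.name}: each factor must be between 0 and 10")
        return sum(factors)


# Illustrative factor values chosen only to reproduce the article's totals.
issues = [
    DataIssue("missing territory", revenue_exposure=9, frequency=9, cascading=9),
    DataIssue("incomplete social links", revenue_exposure=1, frequency=3, cascading=0),
]
ranked = sorted(issues, key=DataIssue.impact_score, reverse=True)
```

Keeping the three factors separate, rather than storing only the total, makes it easier to explain later why one issue outranked another.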

Automation Dependency Mapping: We identified every marketing automation workflow and sales process that depended on specific data fields. Then we cross-referenced our data quality findings against those dependencies.

This revealed that three fields—territory assignment, industry classification, and employee count—were blocking 18 different automation workflows. Fixing those three fields would unlock significantly more value than fixing dozens of lower-impact fields.
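
Dependency mapping is essentially a set intersection between "fields each workflow reads" and "fields the audit flagged." A minimal Python sketch, assuming a hand-maintained inventory; the workflow names and field sets are hypothetical and much smaller than the 18 workflows described at CT3.

```python
from collections import Counter

# Hypothetical inventory: workflow or process -> data fields it reads.
WORKFLOW_DEPENDENCIES = {
    "enterprise_nurture": {"industry", "employee_count"},
    "lead_routing": {"territory", "country"},
    "smb_onboarding": {"employee_count", "industry"},
    "exec_pipeline_report": {"territory", "industry"},
    "webinar_followup": {"email"},
}

# Hypothetical audit result: fields with known quality problems.
PROBLEM_FIELDS = {"territory", "industry", "employee_count"}


def blocked_workflows(deps, problem_fields):
    """Workflows that read at least one problem field."""
    return sorted(w for w, fields in deps.items() if fields & problem_fields)


def blocking_counts(deps, problem_fields):
    """For each problem field, the number of workflows it blocks."""
    counts = Counter()
    for fields in deps.values():
        counts.update(fields & problem_fields)
    return counts
```

Sorting `blocking_counts(...)` with `most_common()` gives a direct ranking of which fields to fix first.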

Effort Estimation: Some data problems are easy to fix programmatically. Others require manual research and validation. We categorized each issue as:

- Quick wins (Can be fixed with bulk updates or simple enrichment)

- Medium effort (Requires some manual review or external data sources)

- High effort (Needs extensive research or complex data transformation)

The prioritization matrix that emerged looked like this:

- High Impact + Quick Win = Do immediately

- High Impact + Medium Effort = Schedule next

- High Impact + High Effort = Break into phases

- Everything else = Backlog

This framework prevented the common mistake of starting with the easiest problems rather than the most important ones.
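
The prioritization matrix reduces to a small lookup once each issue has an impact score and an effort level. In this Python sketch, the threshold of 20 out of 30 for "high impact" is an assumption (the article does not define the cut-off), and the sample issues are hypothetical.

```python
HIGH_IMPACT_THRESHOLD = 20  # hypothetical cut-off on the 30-point scale

ACTIONS = {
    "quick": "Do immediately",
    "medium": "Schedule next",
    "high": "Break into phases",
}


def priority_bucket(impact_score: int, effort: str) -> str:
    """Map an impact score and effort level to a prioritization bucket."""
    if effort not in ACTIONS:
        raise ValueError(f"unknown effort level: {effort!r}")
    if impact_score < HIGH_IMPACT_THRESHOLD:
        return "Backlog"
    return ACTIONS[effort]


# Hypothetical issues: (name, impact score out of 30, effort level)
issues = [
    ("blank territory field", 27, "quick"),
    ("unmapped industry codes", 24, "medium"),
    ("broken account hierarchies", 22, "high"),
    ("incomplete social links", 4, "quick"),
]
plan = {name: priority_bucket(score, effort) for name, score, effort in issues}
```

Checking impact before effort is what enforces the rule in the text: an easy but low-impact fix still lands in the backlog.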

Phase 3: Automate (Validation Rules & Enrichment)

With priorities established, we moved to prevention and correction.

Validation Rules at Point of Entry: We implemented CRM validation rules that prevented incomplete records from being created in the first place. Before any form submission could create a new lead record, it had to include:

- Valid email format (not just presence of @ symbol, but domain validation)

- Complete company name (minimum 3 characters, no test entries)

- Industry selection (from predefined list, not free text)

- Country code (for proper territory routing)

These rules felt restrictive to the marketing team initially, but they prevented thousands of incomplete records from entering the system. Better to capture 85% of leads with complete data than 100% of leads with 40% missing critical fields.
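
In most CRMs these checks are configured as native validation rules or required form fields rather than written as code. The Python sketch that follows only illustrates the logic of the four rules. The industry picklist, the test-entry blocklist, and the assumption that "country code" means an ISO 3166-1 alpha-2 code are hypothetical. The email pattern checks syntax only; confirming that a domain actually exists would require a DNS (MX) lookup, which is not shown.

```python
import re

# Hypothetical picklist and blocklist; replace with your CRM's values.
INDUSTRIES = {"Healthcare", "Manufacturing", "Retail", "Software"}
TEST_ENTRIES = {"test", "asdf", "n/a", "none"}

# Syntax check only: a local part, "@", dot-separated domain labels, and a 2+ letter TLD.
# It does NOT confirm the domain exists; that would need a DNS (MX) lookup.
EMAIL_RE = re.compile(r"^[^@\s]+@(?:[A-Za-z0-9-]+\.)+[A-Za-z]{2,}$")


def validate_lead(form: dict) -> list:
    """Return a list of error messages; an empty list means the lead may be created."""
    errors = []
    if not EMAIL_RE.match(form.get("email") or ""):
        errors.append("email: invalid format or domain")
    company = (form.get("company") or "").strip()
    if len(company) < 3 or company.lower() in TEST_ENTRIES:
        errors.append("company: at least 3 characters, no test entries")
    if form.get("industry") not in INDUSTRIES:
        errors.append("industry: choose a value from the list")
    country = form.get("country") or ""
    if not (len(country) == 2 and country.isalpha() and country.isupper()):
        errors.append("country: expected a two-letter ISO 3166-1 alpha-2 code")
    return errors
```

Returning every error at once, instead of stopping at the first, lets a form show the submitter everything that needs fixing in one pass.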

Progressive Enrichment Workflows: Rather than trying to enrich all historical data at once, we created automated workflows that filled gaps progressively:

- When a lead engaged with content, trigger enrichment lookup

- When an opportunity reached a specific stage, validate and complete account data

- When a contact changed companies (detected via email bounce), update employment information

This approach spread the enrichment cost over time and focused resources on active records rather than dormant ones.
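
Progressive enrichment amounts to an event-to-action mapping: only records that generate activity ever reach an enrichment step. This Python sketch assumes hypothetical event names and stage names, and its actions merely return a description rather than calling any real enrichment provider.

```python
from typing import Callable, Dict, Optional

# Hypothetical stages at which account data gets validated and completed.
ENRICH_AT_STAGES = {"Proposal", "Negotiation"}


# Placeholder actions: they describe the step instead of calling a real provider.
def enrich_lead(record: dict) -> str:
    return f"enrichment lookup for {record['id']}"


def complete_account(record: dict) -> str:
    return f"validate and complete account for {record['id']}"


def update_employment(record: dict) -> str:
    return f"update employment info for {record['id']}"


# Hypothetical event names mapped to the enrichment step each one triggers.
TRIGGERS: Dict[str, Callable[[dict], str]] = {
    "content_engagement": enrich_lead,
    "opportunity_stage_changed": complete_account,
    "email_bounced": update_employment,
}


def handle_event(event_type: str, record: dict) -> Optional[str]:
    """Run the enrichment step tied to an event, or return None if nothing applies."""
    if event_type == "opportunity_stage_changed" and record.get("stage") not in ENRICH_AT_STAGES:
        return None
    action = TRIGGERS.get(event_type)
    return action(record) if action else None
```

Because dormant records never emit events, they never consume enrichment budget, which is the cost-spreading effect described in the text.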

Duplicate Prevention: We configured matching rules that checked for existing records before creating new ones. The matching logic considered:

- Email domain similarity (to catch spelling variations)

- Company name fuzzy matching (to catch IBM vs. I.B.M.)

- Phone number normalization (to standardize formatting)

When a potential duplicate was detected, the system either merged automatically (for high-confidence matches) or flagged for manual review (for ambiguous cases).
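
The three-way outcome (merge, review, or create) can be sketched as a weighted similarity score with two thresholds. This Python version uses the standard library's `difflib.SequenceMatcher` as a stand-in for whatever fuzzy matcher a CRM provides; the weights (0.4 name, 0.4 email domain, 0.2 phone) and the 0.9 and 0.7 thresholds are hypothetical and would need tuning against manually reviewed pairs.

```python
import re
from difflib import SequenceMatcher

# Hypothetical weights and thresholds; tune them against manually reviewed pairs.
AUTO_MERGE_AT = 0.9
REVIEW_AT = 0.7


def normalize_phone(phone):
    """Digits only; drop a leading '1' country code from 11-digit North American numbers."""
    digits = re.sub(r"\D", "", phone or "")
    if len(digits) == 11 and digits.startswith("1"):
        digits = digits[1:]
    return digits


def normalize_name(name):
    """Lowercase and strip everything except letters and digits ("I.B.M." -> "ibm")."""
    return re.sub(r"[^a-z0-9]", "", (name or "").lower())


def email_domain(record):
    email = (record.get("email") or "").lower()
    return email.split("@", 1)[1] if "@" in email else ""


def similarity(a, b):
    """SequenceMatcher ratio in [0, 1]; 0 if either side is empty."""
    return SequenceMatcher(None, a, b).ratio() if a and b else 0.0


def match_decision(new, existing):
    """Return 'merge', 'review', or 'create' for a new record against an existing one."""
    name_score = similarity(normalize_name(new.get("company")), normalize_name(existing.get("company")))
    domain_score = similarity(email_domain(new), email_domain(existing))
    phone = normalize_phone(new.get("phone"))
    phone_score = 1.0 if phone and phone == normalize_phone(existing.get("phone")) else 0.0
    score = 0.4 * name_score + 0.4 * domain_score + 0.2 * phone_score
    if score >= AUTO_MERGE_AT:
        return "merge"
    if score >= REVIEW_AT:
        return "review"
    return "create"
```

One practical caveat: domain similarity should usually be ignored for free email providers such as gmail.com, since a shared consumer domain says nothing about a shared company.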

The automation phase at CT3 reduced new data quality issues by 67% within the first month of implementation.

Phase 4: Maintain (Governance & Training)

The final phase is what separates temporary fixes from lasting improvements.

Regular Audit Cadence: We established quarterly data quality audits using the same methodology from Phase 1. This created a baseline for measuring improvement and catching new issues before they became systemic problems.

Each quarterly audit included:

- Random sampling of 200 records across all sources

- Automated data quality reports comparing current vs. previous quarter

- Review of validation rule effectiveness (were they preventing bad data or just frustrating users?)
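
The quarterly sample and the quarter-over-quarter comparison are simple to automate. This Python sketch draws a reproducible random sample and flags metrics that moved the wrong way; the metric names and the assumption that only `duplicate_rate` is "lower is better" are hypothetical.

```python
import random

# Hypothetical metrics where a rise is bad; every other metric is "higher is better".
LOWER_IS_BETTER = frozenset({"duplicate_rate"})


def sample_records(records, n=200, seed=None):
    """Simple random sample without replacement (all records if there are fewer than n)."""
    pool = list(records)
    rng = random.Random(seed)
    return rng.sample(pool, min(n, len(pool)))


def quarter_over_quarter(current, previous):
    """Change in each metric present in both quarters."""
    return {m: round(current[m] - previous[m], 4) for m in current if m in previous}


def regressions(current, previous, lower_is_better=LOWER_IS_BETTER):
    """Metrics that moved in the wrong direction since last quarter."""
    deltas = quarter_over_quarter(current, previous)
    return sorted(
        m for m, d in deltas.items() if (d > 0 if m in lower_is_better else d < 0)
    )
```

Passing a fixed `seed` makes a given quarter's sample reproducible, so a second reviewer can re-check exactly the same 200 records.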

User Training: The sales and marketing teams received training on why data quality mattered and how their daily actions affected it. We avoided abstract concepts and focused on specific examples:

- "When you abbreviate company names inconsistently, leads get routed to the wrong rep"

- "When you skip industry classification, this prospect won't receive relevant nurture content"

- "When you create a new account instead of finding the existing one, pipeline reporting becomes inaccurate"

Training wasn't a one-time event. We incorporated data quality reminders into onboarding for new hires and created quick reference guides accessible from within the CRM.

Accountability Metrics: We added data quality metrics to team dashboards:

- Marketing: Percentage of leads with complete scoring data

- Sales: Percentage of opportunities with accurate close dates and next steps

- Operations: System-wide completeness score and duplicate account rate

These metrics weren't punitive—they were diagnostic. When scores dropped, it signaled either a process breakdown or a need for additional training.

Tool Optimization: Every six months, we reviewed our data enrichment tools and validation rules. Were they still catching the right issues? Had new data quality problems emerged that required new rules? Did we need different enrichment sources?

This continuous improvement mindset prevented the framework from becoming stale or irrelevant as business needs evolved.

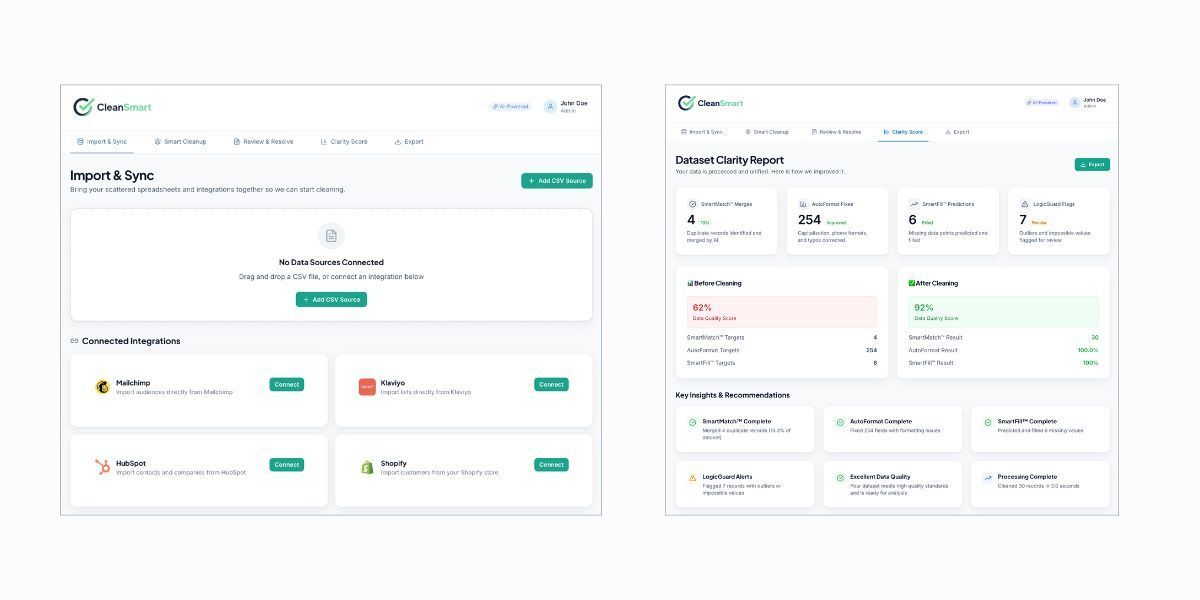


The Results: 7% Monthly Deal Increase

Six months after implementing this four-phase framework, CT3 saw quantifiable improvements:

- Monthly closed deals increased by 7%

- Lead-to-opportunity conversion improved by 12%

- Average sales cycle shortened by 8 days

- Marketing automation engagement rates increased by 23%

- Executive pipeline reporting accuracy improved by 34%

The most telling metric? Sales rep satisfaction scores for CRM data quality improved from 4.2 to 7.8 out of 10. When the people using your data trust that data, they use it more effectively.

The financial impact was clear. With an average deal size of $47,000, that 7% monthly increase translated to roughly $850,000 in additional annual revenue—all from making better use of existing leads and opportunities.
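
The article does not state CT3's baseline deal volume, but the two published figures imply one. Additional annual revenue equals baseline monthly deals × lift × 12 × average deal size, so the formula can be inverted. This Python sketch assumes the 7% lift applied evenly across all twelve months and that average deal size stayed at $47,000.

```python
def added_annual_revenue(baseline_deals_per_month, lift, avg_deal_size):
    """Extra deals from a relative lift, annualized and valued at the average deal size."""
    return baseline_deals_per_month * lift * 12 * avg_deal_size


def implied_baseline(added_revenue, lift, avg_deal_size):
    """Invert the formula: monthly deal volume needed to produce a given revenue gain."""
    return added_revenue / (lift * 12 * avg_deal_size)


# Figures from the article: roughly $850,000 added, 7% lift, $47,000 average deal.
baseline = implied_baseline(850_000, 0.07, 47_000)
```

The result is roughly 21.5 closed deals per month before the cleanup, or about 1.5 extra deals per month (around 18 per year) after it.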

Which Phase Is Your Team Stuck On?

Most organizations recognize they have a data quality problem. Few have a systematic framework for solving it.

The common mistake is jumping straight to Phase 3 (buying enrichment tools or adding validation rules) without completing Phase 1 (understanding what's actually broken) or Phase 2 (prioritizing based on business impact).

Another common trap is treating data cleanup as a project with a defined end date rather than an ongoing discipline. Phase 4 (maintenance) is what determines whether your improvements last beyond the initial cleanup effort.

If you're dealing with incomplete CRM data, poor lead routing, or underperforming marketing automation, this framework provides a structured approach to diagnosis and resolution.

The question isn't whether you need better data quality. The question is: where do you start?


How long does it take to implement this 4-phase framework?

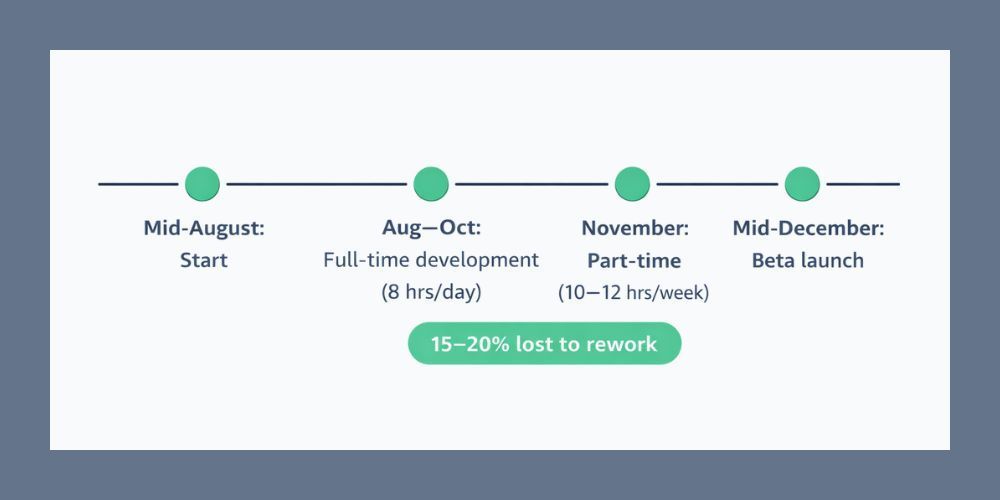

- Phase 1 (Audit) typically takes 4-6 weeks with dedicated resources.

- Phase 2 (Prioritize) can be completed in 1-2 weeks once audit data is available.

- Phase 3 (Automate) spans 8-12 weeks depending on CRM complexity and technical resources.

- Phase 4 (Maintain) is ongoing but requires approximately 10-15 hours per quarter for audits and optimization.

Most organizations see measurable improvements within 90 days of starting Phase 3 implementation.

Can this framework work without expensive data enrichment tools?

Yes. While enrichment tools accelerate the process, the framework's core value comes from systematic diagnosis and prioritization. At CT3, we achieved significant results using manual spreadsheet audits, native CRM validation rules, and free enrichment sources before investing in premium tools. Start with the methodology, then add tools as budget allows and ROI justifies.

What's the biggest mistake companies make when trying to fix CRM data quality?

Skipping Phase 2 (Prioritize). Organizations often try to fix every data problem simultaneously, spreading resources thin and seeing minimal impact. The 80/20 rule applies—typically 20% of data quality issues cause 80% of business problems. Focus on high-impact issues first, particularly those blocking automation or routing. You'll see faster results and build momentum for broader cleanup efforts.

Author: William Flaiz