Why Your Platform Migration Failed (And It Wasn't the Platform)

Your CRM migration didn't fail because you chose the wrong platform. It failed because you moved 47,000 duplicate records, blank fields, and seven years of garbage data to a shiny new system.

So here's what happens.

A company spends 18 months and $2.1 million migrating from their legacy CRM to Salesforce. The implementation partner promises streamlined workflows, better reporting, and improved sales productivity. The executive team gets demos showing clean dashboards and automated processes. Everyone's excited.

Six months after go-live, sales cycle length hasn't improved. Lead conversion rates are the same or worse. The VP of Sales complains that reps still can't find accurate customer information. Marketing can't target campaigns because contact data is still a mess. The CFO starts asking uncomfortable questions about ROI.

The blame game starts. Sales says the implementation partner configured it wrong. The implementation partner says users need more training. IT says people aren't following the new processes. Leadership starts wondering if they should have chosen HubSpot instead.

Nobody mentions the 47,000 duplicate contact records they migrated. Or the 23% of accounts with blank industry fields. Or the fact that "lead source" had 47 different values because seven regional teams each created their own.

The platform wasn't the problem. The data that moved with it was.

The pattern repeats itself

I've seen this across pharmaceutical companies, financial services firms, and B2B SaaS organizations. The specifics change, but the story stays the same: big migration budget, careful vendor selection, detailed requirements, professional implementation, disappointing results.

One healthcare organization spent $1.8 million moving from Siebel to Salesforce. They hired a top-tier consulting firm. They did change management training. They even had executive sponsors and a steering committee. The technical migration was flawless—not a single record lost, zero downtime, perfect data mapping.

But when we audited their data six months post-migration, we found that 31% of their "opportunity" records had no associated contact. Another 18% had contacts with invalid email addresses that had been bouncing for years. Their "account health score" field, which was supposed to drive their customer success strategy, was blank in 64% of records.

The Salesforce implementation was perfect. The data that went into it was garbage.

Another financial services client migrated from Microsoft Dynamics to Salesforce, spending $2.3 million. They were convinced Dynamics was holding them back—reports were slow, the interface was clunky, salespeople hated it. Salesforce would fix everything.

Post-migration, their sales team still couldn't run accurate pipeline reports. Why? Because their "opportunity stage" field had been manually entered for five years with no data validation. We found 89 different values for what should have been 6 standard stages. Someone typed "Closeing Soon" (note the typo) into 1,247 opportunity records. That typo migrated perfectly to their new $2.3 million platform.

The platform worked fine. The humans who created the data didn't follow any standards.

Why executives keep making this mistake

There's a weird cognitive bias that happens with enterprise software. When performance is bad, we assume the technology is the limiting factor. Our brains want a clean culprit—this tool is old, that vendor doesn't innovate, these features are missing.

Data quality problems are messier. They implicate people, processes, training gaps, organizational silos, and years of accumulated shortcuts. Nobody wants to tell the board that the real issue is that the marketing team in Germany classifies leads differently than the team in the US, and both are different from how Asia-Pacific does it.

So we blame the platform. It's easier.

But the math doesn't work. If your data is 68% accurate in Microsoft Dynamics, it'll be 68% accurate in Salesforce. The platform doesn't clean your data during migration—it just moves it to a prettier interface with better reporting tools. Those better reporting tools then show you, with crystal clarity, exactly how dirty your data is.

I'm not saying the platform choice doesn't matter. It does. But if you're choosing among modern enterprise CRMs, the performance differences among Salesforce, HubSpot, Microsoft Dynamics, and Zoho aren't what's preventing your sales team from hitting quota. The difference between 94% data accuracy and 68% data accuracy? That's killing you.

The operational diagnostic framework

Here's how to determine if your migration failed because of data quality rather than platform choice. These are specific metrics you can measure right now, regardless of which CRM you're using.

Duplicate rate analysis

Pull your complete contact database. Count how many unique email addresses you have. Then count how many total contact records exist. If the gap between the two is more than 3% of your total record count, you have a duplicate problem that's affecting your operations.

We did this for a pharmaceutical client with 127,000 contact records. They had 89,000 unique email addresses. That meant 38,000 duplicate records—30% of their entire database. Their sales reps were logging activities on different versions of the same contact. Marketing was sending duplicate emails. Pipeline reports were inflated because the same opportunity was associated with multiple duplicate contacts.

The duplicate rate should be under 3%. If it's over 10%, your data quality is actively preventing your sales team from functioning correctly, regardless of which platform you're using.
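To make the thresholds concrete, here is a minimal sketch that turns the two counts into a duplicate rate and buckets it. It assumes email is the identity key, as described above. The label for the 3% to 10% band ("needs attention") is a hypothetical name, since the text only defines the two ends.

```python
def duplicate_rate(total_records: int, unique_emails: int) -> float:
    """Share of records that are duplicates, using unique email as the identity key."""
    if total_records <= 0:
        raise ValueError("total_records must be positive")
    return 1 - unique_emails / total_records


def classify_duplicate_rate(rate: float) -> str:
    """Bucket a duplicate rate using the 3% and 10% thresholds from the text."""
    if rate < 0.03:
        return "healthy"
    if rate <= 0.10:
        return "needs attention"  # hypothetical label for the middle band
    return "actively harmful"


# The pharmaceutical example: 127,000 records, 89,000 unique emails.
rate = duplicate_rate(127_000, 89_000)
print(f"{rate:.1%} -> {classify_duplicate_rate(rate)}")  # prints: 29.9% -> actively harmful
```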

Field completion audit

Identify the 10 most business-critical fields in your CRM. For B2B, this typically includes: account name, industry, annual revenue, number of employees, primary contact name, contact title, contact email, contact phone, account owner, and account status.

Calculate the percentage of records where each field is populated with valid data (not just "Unknown" or "N/A" or random characters someone typed to get past a required field). If any of these critical fields is below 85% completion, that field is unreliable for reporting or automation.
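One way to run this audit on an export is sketched below. The placeholder list and the field names (`industry`, `contact_title`) are hypothetical, so adjust them to what your team actually types. A fixed list catches common filler like "Unknown" or "N/A". It will not catch arbitrary random characters, which usually need a manual review of the rarest values.

```python
# Hypothetical placeholder values that should not count as "populated".
PLACEHOLDERS = {"", "unknown", "n/a", "na", "none", "-", "tbd", "xxx"}


def is_valid(value) -> bool:
    """True if a field holds something other than a blank or a known placeholder."""
    if value is None:
        return False
    return str(value).strip().lower() not in PLACEHOLDERS


def completion_report(records, fields, threshold=0.85):
    """Return {field: (valid_share, meets_threshold)} for each critical field."""
    report = {}
    total = len(records)
    for field in fields:
        valid = sum(1 for r in records if is_valid(r.get(field)))
        share = valid / total if total else 0.0
        report[field] = (share, share >= threshold)
    return report


records = [
    {"industry": "Technology", "contact_title": "CFO"},
    {"industry": "N/A", "contact_title": "VP Sales"},
    {"industry": "Software", "contact_title": ""},
    {"industry": "Unknown", "contact_title": "Director"},
]
for field, (share, ok) in completion_report(records, ["industry", "contact_title"]).items():
    print(f"{field}: {share:.0%} {'reliable' if ok else 'unreliable'}")
```

Note that "Technology" and "Software" both count as valid here. Completion alone says nothing about consistency, which is the point of the example that follows.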

One B2B software client had "industry" filled in for 91% of accounts. Sounds good, right? Then we analyzed the actual values. We found 247 different industry classifications for what should have been 15 standard options. "Technology" appeared 4,891 times. "Tech" appeared 1,247 times. "Software" appeared 2,103 times. All three should have been the same category. Their "industry-based" marketing campaigns were hitting less than 60% of their intended targets because the targeting rules couldn't account for every variation.

Field completion percentage doesn't matter if the completed fields contain inconsistent garbage.

Data standardization measurement

Pick three fields that should have controlled values: lead source, opportunity stage, and account status are common ones. Export all unique values for each field. Count them.

For lead source, you should have 10-15 standard options. If you have 40+, your data isn't standardized. For opportunity stage, 5-8 standard stages is typical. If you have 25+, your pipeline reports are meaningless. For account status, 4-6 categories is standard. If you're seeing 15+, nobody can reliably segment your customer base.
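The count-and-compare step can be sketched as follows, using the ranges above as limits. The field keys and the "drifting" label for the gap between the typical range and the problem level are assumptions for illustration. Values are counted exactly as stored, so "Closed Won" and "closed won" count separately, just as they would split a pipeline report.

```python
from collections import Counter

# (typical upper bound, problem level) per field, from the ranges in the text.
LIMITS = {
    "lead_source": (15, 40),
    "opportunity_stage": (8, 25),
    "account_status": (6, 15),
}


def standardization_check(records, field):
    """Count distinct stored values for a field and compare them against the limits."""
    values = Counter(r.get(field) for r in records if r.get(field))
    distinct = len(values)
    typical_max, problem_at = LIMITS[field]
    if distinct >= problem_at:
        verdict = "not standardized"
    elif distinct > typical_max:
        verdict = "drifting"  # hypothetical label for the in-between zone
    else:
        verdict = "within typical range"
    return distinct, verdict, values.most_common(5)


records = [{"opportunity_stage": s} for s in
           ["Prospecting", "Qualification", "Proposal", "Closeing Soon",
            "closed won", "Closed Won", "Closed Lost", "Negotiation",
            "Verbal", "On Hold"]]
distinct, verdict, top = standardization_check(records, "opportunity_stage")
print(distinct, verdict)
```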

We audited a financial services firm that had 67 unique values in their "lead source" field. Some highlights: "Webinar," "webinar," "Webinar - Q3," "Q3 Webinar," "September Webinar," "Sept Webinar," "Webinar (Sept)," and "Webinar-September." All different values. All meaning the same thing. Their marketing attribution reporting was worthless because nobody could aggregate performance by channel.
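A common remediation pattern maps free-text variants onto a canonical list with ordered rules and flags anything unmatched for human review. The sketch below shows that pattern. The rule set and canonical names ("Webinar", "Event", "Referral") are hypothetical examples, not a recommended taxonomy.

```python
import re

# Hypothetical mapping rules: pattern -> canonical lead source. First match wins.
CANONICAL_RULES = [
    (re.compile(r"webinar", re.IGNORECASE), "Webinar"),
    (re.compile(r"trade\s*show|conference", re.IGNORECASE), "Event"),
    (re.compile(r"referr?al", re.IGNORECASE), "Referral"),
]


def standardize(value: str) -> str:
    """Map a free-text lead source to a canonical value, or flag it for review."""
    for pattern, canonical in CANONICAL_RULES:
        if pattern.search(value):
            return canonical
    return "NEEDS REVIEW"


variants = ["Webinar", "webinar", "Webinar - Q3", "Q3 Webinar", "September Webinar",
            "Sept Webinar", "Webinar (Sept)", "Webinar-September"]
print({standardize(v) for v in variants})
```

This collapses the date detail ("Q3", "Sept"). If campaign timing matters, one option is to keep it in a separate campaign or date field rather than encoding it in the lead source.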

Data decay analysis

Pull all contact records that haven't been updated in the past 12 months. Calculate what percentage of your total database this represents. If it's over 30%, you have significant data decay. People change jobs, email addresses become invalid, phone numbers change, companies get acquired. Old data is often wrong data.

Then calculate your email bounce rate for the records that haven't been touched in 12+ months. If it's over 5%, your data is rotting faster than you're maintaining it. A healthcare client had 41% of their contact database untouched for over 18 months. When marketing tried to run a campaign to that segment, 23% of emails bounced. They were maintaining 52,000 dead records that inflated their "database size" but delivered zero value.
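Both decay measures can be computed in a few lines, as sketched below. The sketch assumes each contact export row carries a `last_modified` timestamp and a `bounced` flag from the most recent send. Both field names are hypothetical, and many CRMs store bounce status elsewhere, such as in the marketing automation tool.

```python
from datetime import datetime, timedelta


def decay_metrics(contacts, now, stale_after_days=365):
    """Return (stale_share, bounce_rate_among_stale).

    Assumes each contact dict has 'last_modified' (datetime) and
    'bounced' (bool) keys; both names are hypothetical.
    """
    cutoff = now - timedelta(days=stale_after_days)
    stale = [c for c in contacts if c["last_modified"] < cutoff]
    stale_share = len(stale) / len(contacts) if contacts else 0.0
    bounce_rate = sum(c["bounced"] for c in stale) / len(stale) if stale else 0.0
    return stale_share, bounce_rate


now = datetime(2024, 6, 1)
contacts = (
    [{"last_modified": datetime(2022, 3, 1), "bounced": i == 0} for i in range(4)]
    + [{"last_modified": datetime(2024, 5, 1), "bounced": False} for _ in range(6)]
)
stale_share, bounce_rate = decay_metrics(contacts, now)
print(f"stale: {stale_share:.0%} (flag > 30%), stale bounce rate: {bounce_rate:.0%} (flag > 5%)")
```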

Cross-system data consistency

If you have multiple systems that should contain matching data (CRM + marketing automation + customer success platform), pull the same 100 records from each system. Check whether critical fields match across platforms.

Account name matches? Contact title matches? Industry classification matches? If consistency is below 90%, you have a data synchronization problem that no single platform migration will fix. We found this at a B2B SaaS company where the same customer had three different industry classifications across their three main systems. Their "customer health score" algorithm was pulling industry-specific benchmarks, but getting different inputs from each platform. The algorithm was mathematically perfect. The data feeding it was contradictory.
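A minimal sketch of the sample comparison follows, under a few stated assumptions. Records share an ID across systems, and "consistency" means the share of (record, field) pairs where every system agrees after trimming and lowercasing. The article doesn't specify whether the 90% threshold is measured per field or per record, so this per-field reading is an interpretation. Variants like "Acme Health" versus "Acme Health Inc." still count as mismatches here.

```python
def consistency_rate(systems, fields, normalize=lambda v: (v or "").strip().lower()):
    """Share of (record, field) pairs whose values agree across every system.

    `systems` maps a system name to {record_id: {field: value}}; only record
    IDs present in every system are compared.
    """
    shared_ids = set.intersection(*(set(recs) for recs in systems.values()))
    checks = agreements = 0
    for rid in shared_ids:
        for field in fields:
            values = {normalize(recs[rid].get(field)) for recs in systems.values()}
            checks += 1
            if len(values) == 1:
                agreements += 1
    return agreements / checks if checks else 0.0


systems = {
    "crm": {"A1": {"industry": "Healthcare", "account_name": "Acme Health"}},
    "marketing": {"A1": {"industry": "Life Sciences", "account_name": "Acme Health"}},
    "success": {"A1": {"industry": "healthcare ", "account_name": "ACME Health"}},
}
print(f"{consistency_rate(systems, ['industry', 'account_name']):.0%}")
```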

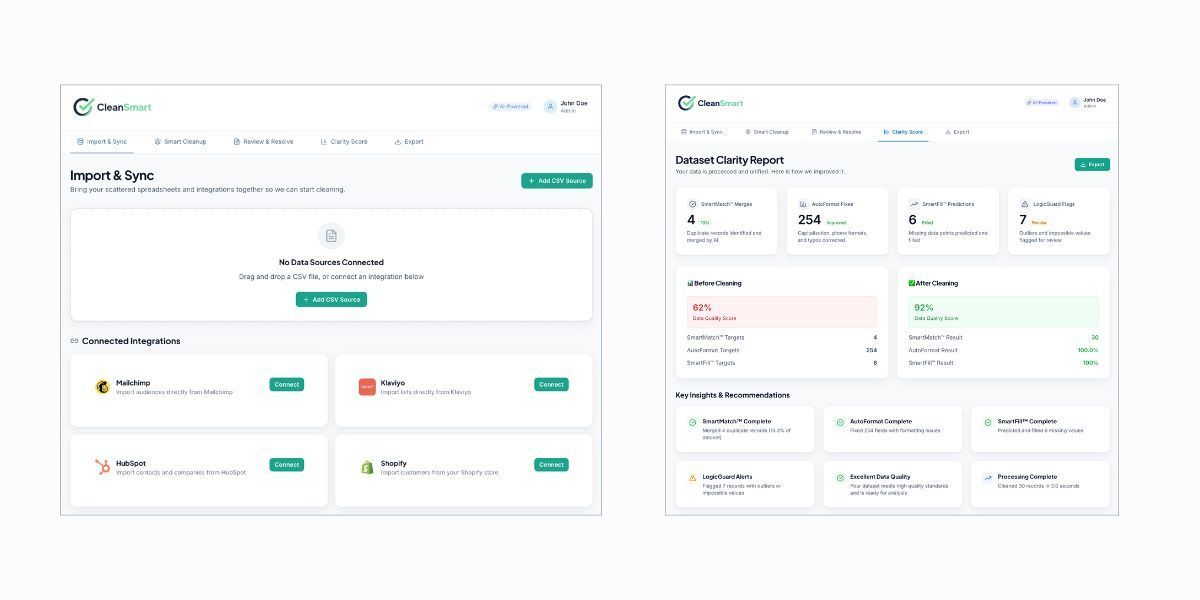

Planning a migration?

Clean your data first.

CleanSmart processes 10,000+ records per minute.

Don't migrate your problems.

What this means for your migration project

If you run these diagnostics and find significant data quality issues, here's the truth: migrating platforms won't fix them. You'll just move bad data to a new system, spend millions on the migration, and end up with the same operational problems you had before.

The fix isn't sexy. It's not a vendor evaluation matrix or a requirements document or a demo from a hot new platform. The fix is data remediation: deduplication, standardization, validation, and ongoing governance. It takes time. It requires cross-functional coordination. It's not a one-time project—it's a continuous discipline.

But the ROI is better than any platform switch. That pharmaceutical client with 30% duplicate records? After cleanup, their sales team reported finding accurate customer information 89% of the time, up from 54%. Lead response time dropped 23% because reps weren't sorting through duplicate records trying to figure out which one was current. Pipeline reporting became reliable enough to forecast accurately.

The financial services firm with 67 different "lead source" values? After standardization, marketing attribution reporting became accurate for the first time in five years. They reallocated budget from underperforming channels to high-performing ones, improving lead generation efficiency by 34% without increasing spend.

The B2B software company with 247 industry classifications? Once standardized, their industry-targeted campaigns reached 94% of intended recipients instead of 60%. Conversion rates increased 28% because messaging was properly tailored to each vertical.

None of these improvements required a platform migration. They required fixing the data.

The uncomfortable conversation

Look, I've led platform migrations. Sometimes you legitimately need to switch systems—maybe your current vendor isn't investing in the product, maybe you've outgrown the platform's capabilities, maybe the architecture is so old that it can't integrate with modern tools you need.

But if you're considering a migration primarily because "performance is bad" or "users don't like it" or "reporting doesn't work," run these diagnostics first. Measure your duplicate rate, field completion, standardization, data decay, and cross-system consistency. Be honest about what you find.

If your data quality scores are below 85% on these measures, migrating platforms will just move your problem to a new environment. You'll spend $1-3 million and 12-18 months only to discover that the new platform has the same reporting problems, the same user complaints, and the same performance issues as the old one.

Because the platform was never the problem. The data was.

Fix the data first. Then decide if you still need a new platform.

How do I calculate an accurate duplicate rate if my CRM doesn't have a built-in deduplication tool?

Export your entire contact database to a spreadsheet or CSV file. Use the email address field as your unique identifier, since each person should only have one business email.

Count the total number of rows (total contacts), then count the number of unique email addresses using a pivot table, the COUNTUNIQUE function in Google Sheets, or =COUNTA(UNIQUE(range)) in current versions of Excel. Divide unique emails by total contacts, then subtract the result from 1 to get your duplicate percentage. For example, if you have 50,000 contacts but only 40,000 unique emails, your duplicate rate is 1 - 40,000/50,000 = 20%.
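If the export is too large for a spreadsheet, the same calculation can be scripted. The sketch below uses Python's standard `csv` module and assumes a column named `Email`, which is a hypothetical name. Emails are trimmed and lowercased so case and stray spaces don't hide duplicates. Rows with no email are reported separately rather than counted, so missing data isn't mistaken for duplication.

```python
import csv
import io


def duplicate_rate_from_csv(csv_file, email_column="Email"):
    """Return (duplicate_rate, rows_with_email, unique_emails, blank_rows)."""
    seen = set()
    total = blanks = 0
    for row in csv.DictReader(csv_file):
        email = (row.get(email_column) or "").strip().lower()
        if not email:
            blanks += 1  # no email: can't judge duplication, report separately
            continue
        total += 1
        seen.add(email)
    rate = 1 - len(seen) / total if total else 0.0
    return rate, total, len(seen), blanks


sample = io.StringIO(
    "Name,Email\n"
    "Jane Doe,jane@acme.com\n"
    "Jane Doe,Jane@Acme.com \n"
    "J. Doe,jane@acme.com\n"
    "Raj Patel,raj@example.com\n"
    "No Email,\n"
)
print(duplicate_rate_from_csv(sample))
```

For a real export, open the file with `open("contacts.csv", newline="", encoding="utf-8")` (hypothetical filename) and pass the file object in place of `sample`.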

This method isn't perfect—it won't catch duplicates with different email addresses for the same person—but it gives you a baseline measurement to track improvement over time.

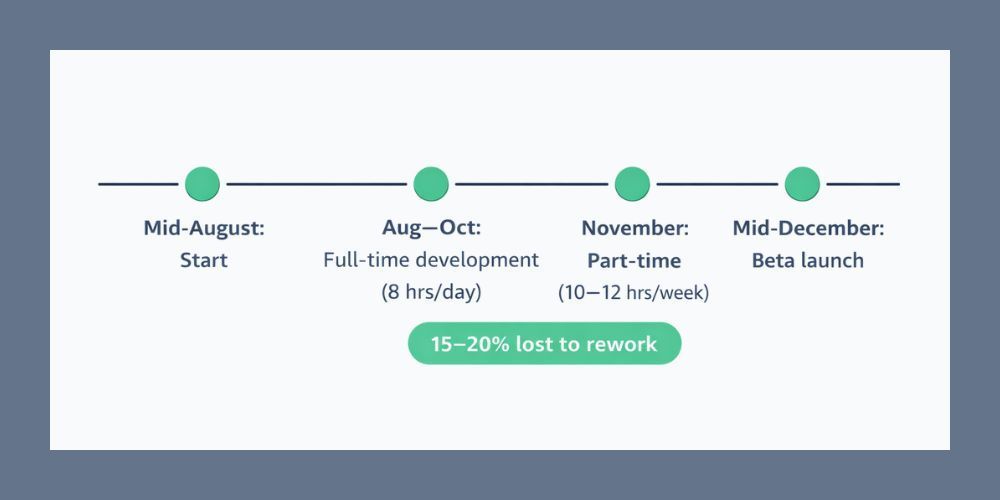

What's a realistic timeline for cleaning up data quality issues before attempting a platform migration?

For a mid-sized B2B company (10,000-100,000 contacts), expect 3-6 months for comprehensive data remediation if you're starting from poor quality (<70% accuracy).

The first month involves auditing and scoping the problem—running diagnostics, identifying data sources, and establishing governance rules. Months 2-4 focus on cleanup: deduplication, standardization, field mapping, and validation. Months 5-6 involve testing new processes, training teams on data entry standards, and implementing ongoing maintenance protocols. Larger organizations (100,000+ contacts) often need 9-12 months.

Don't rush this timeline—cleaning data while simultaneously migrating platforms typically results in both efforts failing. Do one, then the other.

Should we hire a data quality consultant or handle cleanup internally with our existing team?

The decision depends on your internal team's capacity and expertise, not just budget. If your team already understands data architecture, has experience with deduplication tools, and can dedicate 20-30 hours per week to the project, internal cleanup is viable and gives you long-term capability building.

However, most teams underestimate the complexity of enterprise data quality projects. A specialized consultant brings deduplication tools, established cleanup methodologies, and experience with common data quality pitfalls specific to your industry. They're also more likely to establish sustainable governance processes rather than just one-time cleanup.

Consider a hybrid approach: bring in a consultant to establish the framework and train your team, then handle ongoing maintenance internally. This builds internal capability while ensuring the initial cleanup is done correctly.

Author: William Flaiz